The Art of Conversation: Why AI Needs to Learn How to Debate

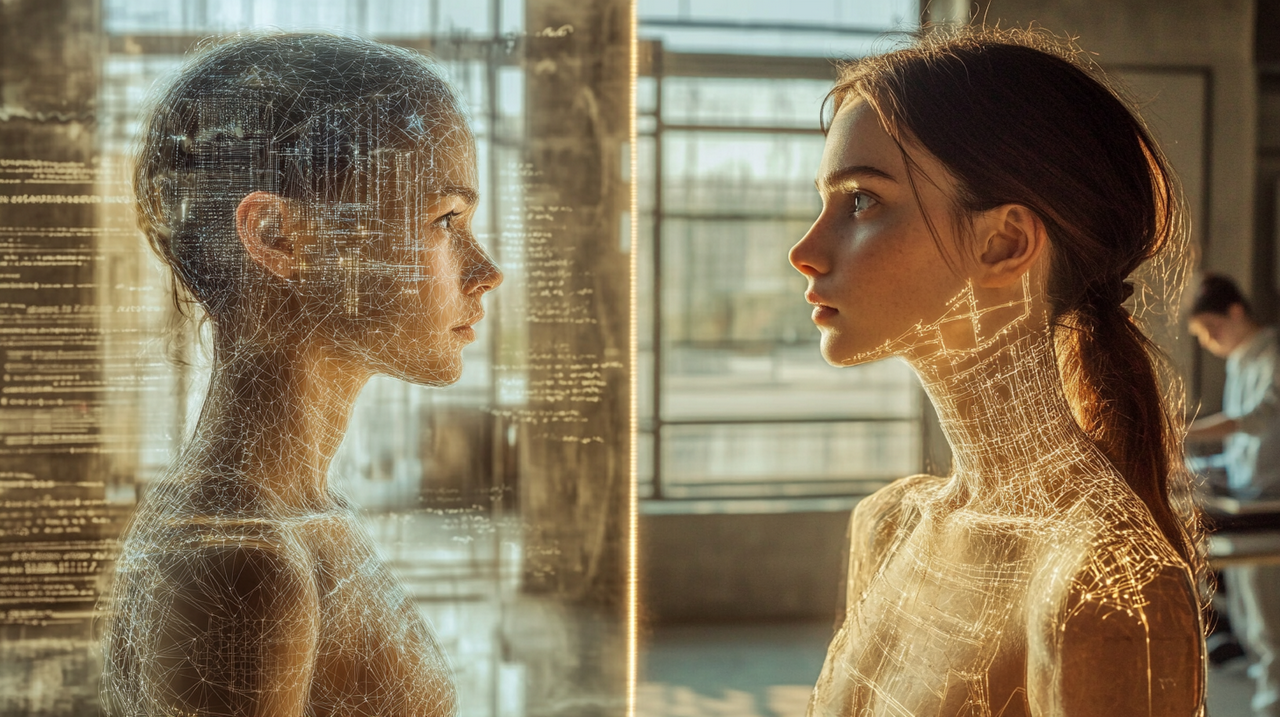

In the gleaming halls of tech conferences, artificial intelligence systems demonstrate remarkable feats—diagnosing diseases, predicting market trends, composing symphonies. Yet when pressed to explain their reasoning, these digital minds often fall silent, or worse, offer explanations as opaque as the black boxes they're meant to illuminate. The future of explainable AI isn't just about making machines more transparent; it's about teaching them to argue, to engage in the messy, iterative process of human reasoning through dialogue. We don't need smarter machines—we need better conversations.

The Silent Treatment: Why Current AI Explanations Fall Short

The landscape of explainable artificial intelligence has evolved dramatically over the past decade, yet a fundamental disconnect persists between what humans need and what current systems deliver. Traditional XAI approaches operate like academic lecturers delivering monologues to empty auditoriums—providing static explanations that assume perfect understanding on the first pass. These systems generate heat maps highlighting important features, produce decision trees mapping logical pathways, or offer numerical confidence scores that supposedly justify their conclusions. Yet they remain fundamentally one-directional, unable to engage with the natural human impulse to question, challenge, and seek clarification through dialogue.

This limitation becomes particularly stark when considering how humans naturally process complex information. We don't simply absorb explanations passively; we interrogate them. We ask follow-up questions, challenge assumptions, and build understanding through iterative exchanges. When a doctor explains a diagnosis, patients don't simply nod and accept; they ask about alternatives, probe uncertainties, and seek reassurance about treatment options. When a financial advisor recommends an investment strategy, clients engage in back-and-forth discussions, exploring scenarios and testing the logic against their personal circumstances.

Current AI systems, despite their sophistication, remain trapped in a paradigm of explanation without engagement. They can tell you why they made a decision, but they cannot defend that reasoning when challenged, cannot clarify when misunderstood, and cannot adapt their explanations to the evolving needs of the conversation. This represents more than a technical limitation; it's a fundamental misunderstanding of how trust and comprehension develop between intelligent agents.

The core challenge of XAI is not purely technical; it is fundamentally a human-agent interaction problem. Progress depends on understanding how humans naturally explain concepts to one another and on building agents that can replicate these social, interactive, and argumentative dialogues.

The consequences of one-directional explanation extend far beyond user satisfaction. In high-stakes domains like healthcare, finance, and criminal justice, the inability to engage in meaningful dialogue about AI decisions can undermine adoption, reduce trust, and potentially lead to harmful outcomes. A radiologist who cannot question an AI's cancer detection reasoning, a loan officer who cannot explore alternative interpretations of credit risk assessments, a judge who cannot probe the logic behind sentencing recommendations: these scenarios highlight the critical gap between current XAI capabilities and real-world needs.

The Dialogue Deficit: Understanding Human-AI Communication Needs

Research into human-centred explainable AI reveals a striking pattern: users consistently express a desire for interactive, dialogue-based explanations rather than static presentations. This isn't merely a preference; it reflects fundamental aspects of human cognition and communication. When we encounter complex information, our minds naturally generate questions, seek clarifications, and test understanding through interactive exchange. The absence of this capability in current AI systems creates what researchers term a “dialogue deficit”—a gap between human communication needs and AI explanation capabilities.

This deficit manifests in multiple ways across different user groups and contexts. Domain experts, such as medical professionals or financial analysts, often need to drill down into specific aspects of AI reasoning that relate to their expertise and responsibilities. They might want to understand why certain features were weighted more heavily than others, how the system would respond to slightly different inputs, or what confidence levels exist around edge cases. Meanwhile, end users—patients receiving AI-assisted diagnoses or consumers using AI-powered financial services—typically need higher-level explanations that connect AI decisions to their personal circumstances and concerns.

The challenge becomes even more complex when considering the temporal nature of understanding. Human comprehension rarely occurs in a single moment; it develops through multiple interactions over time. A user might initially accept an AI explanation but later, as they gain more context or encounter related situations, develop new questions or concerns. Current XAI systems cannot accommodate this natural evolution of understanding, leaving users stranded with static explanations that quickly become inadequate.

Furthermore, the dialogue deficit extends to the AI system's inability to gauge user comprehension and adjust accordingly. Human experts naturally modulate their explanations based on feedback—verbal and non-verbal cues that indicate confusion, understanding, or disagreement. They can sense when an explanation isn't landing and pivot to different approaches, analogies, or levels of detail. AI systems, locked into predetermined explanation formats, cannot perform this crucial adaptive function.

The research literature increasingly recognises that effective XAI must bridge not just the technical gap between AI operations and human understanding, but also the social gap between how humans naturally communicate and how AI systems currently operate. This recognition has sparked interest in more dynamic, conversational approaches to AI explanation, setting the stage for the emergence of argumentative conversational agents as a potential solution. The evolution of conversational agents is moving from reactive—answering questions—to proactive. Future agents will anticipate the need for explanation and engage users without being prompted, representing a significant refinement in their utility and intelligence.

Enter the Argumentative Agent: A New Paradigm for AI Explanation

The concept of argumentative conversational agents signals a philosophical shift in how we approach explainable AI. Rather than treating explanation as a one-way information transfer, this paradigm embraces the inherently dialectical nature of human reasoning and understanding. Argumentative agents are designed to engage in reasoned discourse about their decisions, defending their reasoning while remaining open to challenge and clarification.

At its core, computational argumentation provides a formal framework for representing and managing conflicting information, precisely the kind of complexity that emerges in real-world AI decision-making scenarios. Unlike traditional explanation methods that present conclusions as a fait accompli, argumentative systems explicitly model the tensions, trade-offs, and uncertainties inherent in their reasoning processes. This transparency extends beyond simply showing how a decision was made to revealing why alternative decisions were rejected and under what circumstances those alternatives might become preferable.
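
To make the idea of a formal framework concrete, here is a minimal sketch of the best-known formalism, Dung's abstract argumentation framework, in which arguments are nodes and attacks are directed edges. It computes the grounded extension (the most sceptical set of arguments that can be accepted together) by iterating Dung's characteristic function from the empty set. The diagnostic arguments are a hypothetical toy example, not taken from any deployed system.

```python
from typing import Dict, Set, Tuple

Attack = Tuple[str, str]  # (attacker, target)


def grounded_extension(args: Set[str], attacks: Set[Attack]) -> Set[str]:
    """Least fixed point of Dung's characteristic function, found by iteration."""
    attackers: Dict[str, Set[str]] = {a: set() for a in args}
    for src, dst in attacks:
        attackers[dst].add(src)

    def defended_by(s: Set[str]) -> Set[str]:
        # An argument is defended by s if each of its attackers is attacked by s
        attacked_by_s = {dst for src, dst in attacks if src in s}
        return {a for a in args if attackers[a] <= attacked_by_s}

    current: Set[str] = set()
    while True:
        nxt = defended_by(current)
        if nxt == current:
            return current
        current = nxt


# Hypothetical diagnostic scenario: two competing hypotheses attack each
# other, and an imaging finding attacks one of them.
ARGS = {"pneumonia", "bronchitis", "xray_consolidation"}
ATTACKS = {
    ("pneumonia", "bronchitis"),
    ("bronchitis", "pneumonia"),
    ("xray_consolidation", "bronchitis"),
}

print(sorted(grounded_extension(ARGS, ATTACKS)))  # ['pneumonia', 'xray_consolidation']
```

The rejected hypothesis, bronchitis, stays out because the accepted X-ray argument attacks it, which is exactly the why-not information described above. Remove the X-ray argument and the two hypotheses simply block each other, leaving an empty grounded extension: an unresolved conflict that an argumentative agent can surface to the user instead of hiding.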

The power of this approach becomes evident when considering the nature of AI decision-making in complex domains. Medical diagnosis, for instance, often involves weighing competing hypotheses, each supported by different evidence and carrying different implications for treatment. A traditional XAI system might simply highlight the features that led to the most probable diagnosis. An argumentative agent, by contrast, could engage in a dialogue about why other diagnoses were considered and rejected, how different pieces of evidence support or undermine various hypotheses, and what additional information might change the diagnostic conclusion.

This capability to engage with uncertainty and alternative reasoning paths addresses a critical limitation of current XAI approaches. Many real-world AI applications operate in domains characterised by incomplete information, competing objectives, and value-laden trade-offs. Traditional explanation methods often obscure these complexities in favour of presenting clean, deterministic narratives about AI decisions. Argumentative agents, by embracing the messy reality of reasoning under uncertainty, can provide more honest and ultimately more useful explanations.

The argumentative approach also opens new possibilities for AI systems to learn from human feedback and expertise. When an AI agent can engage in reasoned discourse about its decisions, it creates opportunities for domain experts to identify flaws, suggest improvements, and contribute knowledge that wasn't captured in the original training data. This transforms XAI from a one-way explanation process into a collaborative knowledge-building exercise that can improve both human understanding and AI performance over time. Some of the most promising recent work goes a step further, using “Collaborative Criticism and Refinement” frameworks in which multiple agents argue with one another to improve their reasoning and outputs. This suggests that the argumentative process itself can be a mechanism for progress, not merely a way of presenting its results.

The Technical Foundation: How Argumentation Enhances AI Reasoning

The integration of formal argumentation frameworks with modern AI systems, particularly large language models, represents a substantial rethinking of how machines reason, with profound implications for explainable AI. Computational argumentation provides a structured approach to representing knowledge, managing conflicts, and reasoning about uncertainty, capabilities that complement and enhance the pattern recognition strengths of contemporary AI systems.

Traditional machine learning models, including sophisticated neural networks and transformers, excel at identifying patterns and making predictions based on statistical relationships in training data. However, they often struggle with explicit reasoning, logical consistency, and the ability to articulate the principles underlying their decisions. Argumentation frameworks address these limitations by providing formal structures for representing reasoning processes, evaluating competing claims, and maintaining logical coherence across complex decision scenarios.

The technical implementation of argumentative conversational agents typically involves multiple interconnected components. At the foundation lies an argumentation engine that can construct, evaluate, and compare different lines of reasoning. This engine operates on formal argument structures that explicitly represent claims, evidence, and the logical relationships between them. When faced with a decision scenario, the system constructs multiple competing arguments representing different possible conclusions and the reasoning pathways that support them.
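
Arguments that make claims, evidence, and their relationships explicit can be represented quite directly. The sketch below is loosely modelled on structured formalisms such as ASPIC+, but heavily simplified: propositions are plain strings, a leading "not " marks negation (an assumption of this sketch), and attacks are derived automatically when one argument's claim contradicts another's claim (a rebuttal) or one of its premises (undermining). The credit-decision arguments are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


def negate(p: str) -> str:
    # Assumed convention for this sketch: "not x" is the contrary of "x", and vice versa
    return p[len("not "):] if p.startswith("not ") else "not " + p


@dataclass(frozen=True)
class Argument:
    name: str
    claim: str                # the conclusion the argument supports
    premises: FrozenSet[str]  # the evidence it rests on


def derive_attacks(arguments: List[Argument]) -> Set[Tuple[str, str, str]]:
    """Return (attacker, target, kind) triples for every conflict found."""
    attacks: Set[Tuple[str, str, str]] = set()
    for a in arguments:
        for b in arguments:
            if a is b:
                continue
            if a.claim == negate(b.claim):
                attacks.add((a.name, b.name, "rebuts"))      # conclusion vs conclusion
            if negate(a.claim) in b.premises:
                attacks.add((a.name, b.name, "undermines"))  # conclusion vs premise
    return attacks


# Hypothetical credit decision
ARGUMENTS = [
    Argument("approve", "approve loan", frozenset({"stable income", "low debt"})),
    Argument("decline", "not approve loan", frozenset({"missed payments"})),
    Argument("debt_check", "not low debt", frozenset({"credit report"})),
]

for attack in sorted(derive_attacks(ARGUMENTS)):
    print(attack)
```

The derived attack set is what an argumentation engine would then evaluate; the attack labels also tell a user whether an objection targets a conclusion or the evidence behind it.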

The sophistication of modern argumentation frameworks allows for nuanced handling of uncertainty, conflicting evidence, and incomplete information. Rather than simply selecting the argument with the highest confidence score, these systems can engage in meta-reasoning about the quality of different arguments, the reliability of their underlying assumptions, and the circumstances under which alternative arguments might become more compelling. This capability proves particularly valuable in domains where decisions must be made with limited information and where the cost of errors varies significantly across different types of mistakes.
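
One way to reason about argument quality, rather than simply picking the highest-scoring conclusion, is a gradual semantics, where each argument's strength is computed from its own base score and the strengths of the arguments that attack and support it. The sketch below implements the DF-QuAD semantics for acyclic graphs of attacks and supports; choosing DF-QuAD, and the treatment arguments and base scores used here, are illustrative assumptions rather than anything the frameworks discussed above require.

```python
from math import prod
from typing import Dict, List


def aggregate(strengths: List[float]) -> float:
    # DF-QuAD aggregation (probabilistic sum); 0.0 when there are no arguments
    return 1.0 - prod(1.0 - s for s in strengths)


def combine(base: float, att: float, sup: float) -> float:
    # Move the base score down (net attack) or up (net support) proportionally
    if att >= sup:
        return base - base * (att - sup)
    return base + (1.0 - base) * (sup - att)


def dfquad_strengths(base: Dict[str, float],
                     attackers: Dict[str, List[str]],
                     supporters: Dict[str, List[str]]) -> Dict[str, float]:
    """Strength of every argument; assumes the attack/support graph is acyclic."""
    memo: Dict[str, float] = {}

    def strength(a: str) -> float:
        if a not in memo:
            att = aggregate([strength(b) for b in attackers.get(a, [])])
            sup = aggregate([strength(b) for b in supporters.get(a, [])])
            memo[a] = combine(base[a], att, sup)
        return memo[a]

    return {a: strength(a) for a in base}


# Hypothetical base scores for two treatment options and two considerations
BASE = {"surgery": 0.5, "radiotherapy": 0.55, "frailty": 0.6, "tumour_size": 0.8}
ATTACKERS = {"surgery": ["frailty"]}
SUPPORTERS = {"surgery": ["tumour_size"]}

strengths = dfquad_strengths(BASE, ATTACKERS, SUPPORTERS)
for name, value in sorted(strengths.items()):
    print(f"{name}: {value:.2f}")
```

Surgery starts with a lower base score than radiotherapy (0.5 against 0.55) but ends at 0.60, because its supporter outweighs its attacker. A ranking based on base scores alone would have missed this, and the computation shows the user exactly which considerations moved the result.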

Large language models bring complementary strengths to this technical foundation. Their ability to process natural language, draw on the broad knowledge absorbed during training, and generate human-readable text makes them well suited to act as interfaces for argumentative reasoning systems. The intersection of XAI and LLMs is a dominant area of research, with efforts focused on leveraging the conversational power of LLMs to create more natural and accessible explanations for complex AI models. When integrated effectively, LLMs can translate formal argument structures into natural language explanations, interpret user questions and challenges, and facilitate the kind of fluid dialogue that makes argumentative agents accessible to non-technical users.

Conversely, integrating LLMs with argumentation frameworks also addresses some inherent limitations of the language models themselves. While LLMs demonstrate impressive conversational abilities, they often lack the formal reasoning capabilities needed for consistent, logical argumentation. They may generate plausible-sounding explanations that contain logical inconsistencies, fail to maintain coherent positions across extended dialogues, or struggle with complex reasoning chains that require explicit logical steps. There is a significant risk of “overestimating the linguistic capabilities of LLMs”: their output can be fluent yet incorrect or ungrounded. Argumentation frameworks provide a formal backbone that helps enforce logical consistency and coherent reasoning, while LLMs provide the natural language interface that makes this reasoning accessible to human users.

Consider a practical example: when a medical AI system recommends a particular treatment, an argumentative agent could construct formal arguments representing different treatment options, each grounded in clinical evidence and patient-specific factors. The LLM component would then translate these formal structures into natural language explanations that a clinician could understand and challenge. If the clinician questions why a particular treatment was rejected, the system could present the formal reasoning that led to that conclusion and engage in dialogue about the relative merits of different approaches.
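
The clinician's question, why was this treatment rejected, has a natural formal counterpart. A minimal sketch, assuming the options and their conflicts have already been encoded as an abstract argumentation framework (all argument names and glosses are hypothetical): compute the grounded labelling, in which an argument is IN when all of its attackers are OUT and OUT when some attacker is IN, then answer the question by listing the accepted arguments that attack the rejected option. The `render` function is a fixed template standing in for the LLM component, which in a real system would phrase the same grounded content more fluently.

```python
from typing import Dict, List, Set, Tuple

Attack = Tuple[str, str]  # (attacker, target)


def grounded_labelling(args: Set[str], attacks: Set[Attack]) -> Dict[str, str]:
    """Label each argument IN, OUT or UNDEC under grounded semantics."""
    attackers = {a: {s for s, t in attacks if t == a} for a in args}
    label: Dict[str, str] = {}
    changed = True
    while changed:
        changed = False
        for a in sorted(args):
            if a in label:
                continue
            if all(label.get(b) == "OUT" for b in attackers[a]):
                label[a] = "IN"   # every attacker has been defeated
                changed = True
            elif any(label.get(b) == "IN" for b in attackers[a]):
                label[a] = "OUT"  # defeated by an accepted attacker
                changed = True
    return {a: label.get(a, "UNDEC") for a in args}


def why_rejected(target: str, args: Set[str], attacks: Set[Attack]) -> List[str]:
    """The accepted arguments that defeat `target` (empty if it is not rejected)."""
    labels = grounded_labelling(args, attacks)
    return sorted(s for s, t in attacks if t == target and labels[s] == "IN")


# Hypothetical glosses; this template stands in for the LLM verbaliser
GLOSS = {
    "surgery": "surgical resection is recommended",
    "chemo": "chemotherapy is recommended",
    "stage_ii": "the disease is stage II, where guidelines favour resection",
}


def render(target: str, reasons: List[str]) -> str:
    if not reasons:
        return f"'{GLOSS[target]}' was not rejected by any accepted argument."
    return f"Rejected '{GLOSS[target]}' because: " + "; ".join(GLOSS[r] for r in reasons) + "."


ARGS = {"surgery", "chemo", "stage_ii"}
ATTACKS = {("surgery", "chemo"), ("chemo", "surgery"), ("stage_ii", "chemo")}
print(render("chemo", why_rejected("chemo", ARGS, ATTACKS)))
```

Because the answer is read off the labelling, the explanation can only cite arguments the formal layer actually accepted, which is the division of labour between argumentation backbone and language interface described earlier.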

Effective XAI requires that explanations be “refined with relevant external knowledge.” This is critical for moving beyond plausible-sounding text to genuinely informative and trustworthy arguments, especially in specialised domains such as education, which have “distinctive needs.”

Overcoming Technical Challenges: The Engineering of Argumentative Intelligence

The development of effective argumentative conversational agents requires addressing several significant technical challenges that span natural language processing, knowledge representation, and human-computer interaction. One of the most fundamental challenges involves creating systems that can maintain coherent argumentative positions across extended dialogues while remaining responsive to new information and user feedback.

Traditional conversation systems often struggle with consistency over long interactions, sometimes contradicting earlier statements or failing to maintain coherent viewpoints when faced with challenging questions. Argumentative agents must overcome this limitation by maintaining explicit representations of their reasoning positions and the evidence that supports them. This requires sophisticated knowledge management systems that can track the evolution of arguments throughout a conversation and ensure that new statements remain logically consistent with previously established positions.
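
Dialogue-game approaches to argumentation often handle this with a commitment store: a record of every proposition the agent has publicly asserted, consulted before each new assertion. A minimal sketch, assuming propositions are strings with a leading "not " marking negation and treating only direct contradiction as inconsistency (a real system would need an entailment check); the proposition used is hypothetical:

```python
from typing import List, Set


class CommitmentStore:
    """Records the propositions an agent has publicly committed to in a dialogue."""

    def __init__(self) -> None:
        self.commitments: Set[str] = set()
        self.history: List[str] = []

    @staticmethod
    def contrary(p: str) -> str:
        # Assumed convention for this sketch: "not x" contradicts "x"
        return p[len("not "):] if p.startswith("not ") else "not " + p

    def assert_claim(self, p: str) -> bool:
        """Commit to p, refusing if it directly contradicts an earlier commitment."""
        if self.contrary(p) in self.commitments:
            return False
        self.commitments.add(p)
        self.history.append(f"assert {p}")
        return True

    def retract(self, p: str) -> None:
        """Withdraw p explicitly, e.g. after the user supplies counter-evidence."""
        if p in self.commitments:
            self.commitments.remove(p)
            self.history.append(f"retract {p}")


# Hypothetical proposition from a diagnostic dialogue
store = CommitmentStore()
print(store.assert_claim("malignant"))      # True
print(store.assert_claim("not malignant"))  # False: contradicts a commitment
store.retract("malignant")
print(store.assert_claim("not malignant"))  # True: the change of position is on record
```

Requiring an explicit retraction means that when the agent does change its position, for instance after a user presents counter-evidence, the change is recorded in the dialogue history rather than happening silently.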

The challenge of natural language understanding in argumentative contexts adds another layer of complexity. Users don't always express challenges or questions in formally organised ways; they might use colloquial language, implicit assumptions, or emotional appeals that require careful interpretation. Argumentative agents must be able to parse these varied forms of input and translate them into formal argumentative structures that can be processed by underlying reasoning engines. This translation process requires not just linguistic sophistication but also pragmatic understanding of how humans typically engage in argumentative discourse.

Knowledge integration presents another significant technical hurdle. Effective argumentative agents must be able to draw upon diverse sources of information—training data, domain-specific knowledge bases, real-time data feeds, and user-provided information—while maintaining awareness of the reliability and relevance of different sources. This requires sophisticated approaches to knowledge fusion that can handle conflicting information, assess source credibility, and maintain uncertainty estimates across different types of knowledge.
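
As one simple illustration of credibility-aware fusion, the sketch below combines conflicting yes/no reports about a single proposition. It assumes that each source is independently correct with a known reliability; under that strong assumption, Bayes' rule reduces to adding up each report's log-odds. The source names and reliability values are hypothetical.

```python
from math import exp, log
from typing import List, Tuple

# (source, says_true, reliability), with reliability strictly between 0 and 1
Report = Tuple[str, bool, float]


def fuse(reports: List[Report], prior: float = 0.5) -> float:
    """Posterior probability that the proposition is true.

    Assumes each source is independently correct with its stated reliability.
    """
    log_odds = log(prior / (1.0 - prior))
    for _source, says_true, reliability in reports:
        weight = log(reliability / (1.0 - reliability))  # evidential weight of one report
        log_odds += weight if says_true else -weight
    return 1.0 / (1.0 + exp(-log_odds))


# Hypothetical sources disagreeing about one fact
REPORTS = [
    ("lab_system", True, 0.9),
    ("clinical_registry", True, 0.9),
    ("patient_form", False, 0.6),
]
print(round(fuse(REPORTS), 4))  # 0.9818
```

Two reliable sources outweigh one weakly reliable dissenter, giving posterior odds of 9 × 9 × (0.4 / 0.6) = 54, or a probability of 54/55. A source with reliability 0.5 contributes nothing, and the independence assumption is the first thing to revisit when sources share an upstream origin.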

The Style vs Substance Trap

A critical challenge emerging in the development of argumentative AI systems involves distinguishing between genuinely useful explanations and those that merely sound convincing. This represents what researchers increasingly recognise as the “style versus substance” problem—the tendency for systems to prioritise eloquent delivery over accurate, meaningful content. The challenge lies in ensuring that argumentative agents can ground their reasoning in verified, domain-specific knowledge while maintaining the flexibility to engage in natural dialogue about complex topics.

The computational efficiency of argumentative reasoning represents a practical challenge that becomes particularly acute in real-time applications. Constructing and evaluating multiple competing arguments, especially in complex domains with many variables and relationships, can be computationally expensive. Researchers are developing various optimisation strategies, including hierarchical argumentation structures, selective argument construction, and efficient search techniques that can identify the most relevant arguments without exhaustively exploring all possibilities.
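
One inexpensive form of selective argument construction relies on a property of grounded semantics known as directionality: whether an argument is accepted depends only on the arguments that can reach it through chains of attacks. A sketch, assuming the framework is stored as a map from each argument to its attackers (the graph is hypothetical), that extracts just the relevant part before any evaluation runs:

```python
from collections import deque
from typing import Dict, List, Set


def relevant_arguments(query: str, attackers: Dict[str, List[str]]) -> Set[str]:
    """All arguments with an attack path to `query`, including `query` itself."""
    seen = {query}
    frontier = deque([query])
    while frontier:
        current = frontier.popleft()
        # Walk attack edges backwards: only upstream arguments can affect `query`
        for attacker in attackers.get(current, []):
            if attacker not in seen:
                seen.add(attacker)
                frontier.append(attacker)
    return seen


# Hypothetical framework: target -> list of its attackers
ATTACKERS = {"a": ["b"], "b": ["c"], "d": ["e"], "e": ["a"]}
print(sorted(relevant_arguments("a", ATTACKERS)))  # ['a', 'b', 'c']
```

Here d and e sit downstream of a, so nothing about them can change its status and they never need to be constructed or evaluated. Not every argumentation semantics has this property, so the pruning should only be used with one that does.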

User interface design for argumentative agents requires careful consideration of how to present complex reasoning structures in ways that are accessible and engaging for different types of users. The challenge lies in maintaining the richness and nuance of argumentative reasoning while avoiding cognitive overload or confusion. This often involves developing adaptive interfaces that can adjust their level of detail and complexity based on user expertise, context, and expressed preferences.

The evaluation of argumentative conversational agents presents unique methodological challenges. Traditional metrics for conversational AI, such as response relevance or user satisfaction, don't fully capture the quality of argumentative reasoning or the effectiveness of explanation dialogues. Researchers are developing new evaluation frameworks that assess logical consistency, argumentative soundness, and the ability to facilitate user understanding through interactive dialogue. The style-versus-substance problem is central here: the need to tell a genuinely useful explanation apart from a fluently worded but shallow one has spurred the development of new benchmarks and evaluation methods intended to measure the true quality of conversational explanations.

A major trend is the development of multi-agent frameworks where different AI agents collaborate, critique, and refine each other's work. This “collaborative criticism” mimics a human debate to achieve a more robust and well-reasoned outcome. These systems can engage in formal debates with each other, with humans serving as moderators or participants in these AI-AI argumentative dialogues. This approach helps identify weaknesses in reasoning, explore a broader range of perspectives, and develop more robust conclusions through adversarial testing of different viewpoints.
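
The control flow of such a critique-and-refine loop can be sketched independently of any particular model. In the sketch below the agents are deliberately trivial, hypothetical stand-ins rather than LLM calls: the critic objects to any claim that lacks supporting evidence in a shared table, and the proposer revises by withdrawing the challenged claims. The loop stops when the critic has no objections or a round limit is reached.

```python
from typing import Dict, List, Set, Tuple

Evidence = Dict[str, List[str]]  # claim -> supporting evidence items


def critic(draft: Set[str], evidence: Evidence) -> List[str]:
    # Toy critic: object to every claim with no recorded evidence
    return sorted(c for c in draft if not evidence.get(c))


def proposer(draft: Set[str], objections: List[str]) -> Set[str]:
    # Toy proposer: the simplest revision is to withdraw challenged claims
    return draft - set(objections)


def debate(draft: Set[str], evidence: Evidence,
           max_rounds: int = 3) -> Tuple[Set[str], List[List[str]]]:
    """Alternate critique and revision until no objections remain."""
    transcript: List[List[str]] = []
    for _ in range(max_rounds):
        objections = critic(draft, evidence)
        transcript.append(objections)
        if not objections:
            break
        draft = proposer(draft, objections)
    return draft, transcript


# Hypothetical draft answer and evidence table
DRAFT = {"drug lowers risk", "drug has no side effects", "dose is 10 mg"}
EVIDENCE = {"drug lowers risk": ["trial report"], "dose is 10 mg": ["product label"]}

final, rounds = debate(DRAFT, EVIDENCE)
print(sorted(final))  # ['dose is 10 mg', 'drug lowers risk']
print(rounds)         # [['drug has no side effects'], []]
```

In a real system each function would wrap a model, and the proposer might add evidence or rephrase instead of withdrawing; the transcript of objections is itself a useful explanation of how the final answer was reached.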

The Human Factor: Designing for Natural Argumentative Interaction

The success of argumentative conversational agents depends not just on technical sophistication but on their ability to engage humans in natural, productive argumentative dialogue. This requires deep understanding of how humans naturally engage in reasoning discussions and the design principles that make such interactions effective and satisfying.

Human argumentative behaviour varies significantly across individuals, cultures, and contexts. Some users prefer direct, logical exchanges focused on evidence and reasoning, while others engage more effectively through analogies, examples, and narrative structures. Effective argumentative agents must be able to adapt their communication styles to match user preferences and cultural expectations while maintaining the integrity of their underlying reasoning processes.

Cultural sensitivity in argumentative design becomes particularly important as these systems are deployed across diverse global contexts. Different cultures have varying norms around disagreement, authority, directness, and the appropriate ways to challenge or question reasoning. For instance, Western argumentative traditions often emphasise direct confrontation of ideas and explicit disagreement, while many East Asian cultures favour more indirect approaches that preserve social harmony and respect hierarchical relationships. In Japanese business contexts, challenging a superior's reasoning might require elaborate face-saving mechanisms and indirect language, whereas Scandinavian cultures might embrace more egalitarian and direct forms of intellectual challenge.

These cultural differences extend beyond mere communication style to fundamental assumptions about the nature of truth, authority, and knowledge construction. Some cultures view knowledge as emerging through collective consensus and gradual refinement, while others emphasise individual expertise and authoritative pronouncement. Argumentative agents must be designed to navigate these cultural variations while maintaining their core functionality of facilitating reasoned discourse about AI decisions.

The emotional dimensions of argumentative interaction present particular design challenges. Humans often become emotionally invested in their viewpoints, and challenging those viewpoints can trigger defensive responses that shut down productive dialogue. Argumentative agents must be designed to navigate these emotional dynamics carefully, presenting challenges and alternative viewpoints in ways that encourage reflection rather than defensiveness. This requires sophisticated understanding of conversational pragmatics and the ability to frame disagreements constructively.

Trust building represents another crucial aspect of human-AI argumentative interaction. Users must trust not only that the AI system has sound reasoning capabilities but also that it will engage in good faith dialogue—acknowledging uncertainties, admitting limitations, and remaining open to correction when presented with compelling counter-evidence. This trust develops through consistent demonstration of intellectual humility and responsiveness to user input.

The temporal aspects of argumentative dialogue require careful consideration in system design. Human understanding and acceptance of complex arguments often develop gradually through multiple interactions over time. Users might initially resist or misunderstand AI reasoning but gradually develop appreciation for the system's perspective through continued engagement. Argumentative agents must be designed to support this gradual development of understanding, maintaining patience with users who need time to process complex information and providing multiple entry points for engagement with difficult concepts.

The design of effective argumentative interfaces also requires consideration of different user goals and contexts. A medical professional using an argumentative agent for diagnosis support has different needs and constraints than a student using the same technology for learning or a consumer seeking explanations for AI-driven financial recommendations. The system must be able to adapt its argumentative strategies and interaction patterns to serve these diverse use cases effectively.

From Reactive to Reflective: The Proactive Agent Revolution

The evolution of conversational AI is witnessing a paradigm shift from reactive systems that simply respond to queries to proactive agents that can initiate dialogue, offer unsolicited clarifications, and guide user understanding. This transformation represents one of the most significant developments in argumentative conversational agents, moving beyond the traditional question-and-answer model to create systems that can actively participate in reasoning processes.

Proactive argumentative agents possess the capability to recognise when additional explanation might be beneficial, even when users haven't explicitly requested it. They can identify potential points of confusion, anticipate follow-up questions, and offer clarifications before misunderstandings develop. This proactive capability requires sophisticated models of user needs and context, as well as the ability to judge when intervention or clarification might be helpful rather than intrusive.

The technical implementation of proactive behaviour involves multiple layers of reasoning about user state, context, and communication goals. These systems must maintain models of what users know, what they might be confused about, and what additional information could enhance their understanding. They must also navigate the delicate balance between being helpful and being overwhelming, providing just enough proactive guidance to enhance understanding without creating information overload.
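
The balance between helpful and overwhelming can be framed as a simple decision rule. A sketch under explicit assumptions: the user model supplies an estimated probability that the user is confused about each concept in the current explanation, a clarification yields a fixed benefit when it resolves confusion, and every unprompted interruption carries a fixed cost. The agent volunteers at most a small number of clarifications, highest expected net benefit first. The parameter values and concepts are hypothetical.

```python
from typing import Dict, List


def choose_interventions(p_confused: Dict[str, float],
                         benefit: float = 1.0,
                         interruption_cost: float = 0.4,
                         max_items: int = 2) -> List[str]:
    """Concepts worth clarifying without being asked, best first."""
    # Expected net gain of clarifying each concept unprompted
    gains = [(p * benefit - interruption_cost, concept)
             for concept, p in p_confused.items()]
    worthwhile = [(g, c) for g, c in gains if g > 0]
    worthwhile.sort(key=lambda item: (-item[0], item[1]))
    return [c for _, c in worthwhile[:max_items]]


# Hypothetical user-model estimates for a diagnostic explanation
P_CONFUSED = {
    "false positive rate": 0.7,
    "prior probability": 0.55,
    "confidence interval": 0.5,
    "feature importance": 0.2,
}
print(choose_interventions(P_CONFUSED))  # ['false positive rate', 'prior probability']
```

Raising `interruption_cost` (for example, when the user is mid-task) makes the agent quieter, and `max_items` caps how much it volunteers at once.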

In medical contexts, a proactive argumentative agent might recognise when a clinician is reviewing a complex case and offer to discuss alternative diagnostic possibilities or treatment considerations that weren't initially highlighted. Rather than waiting for specific questions, the agent could initiate conversations about edge cases, potential complications, or recent research that might influence decision-making. This proactive engagement transforms the AI from a passive tool into an active reasoning partner.

The development of proactive capabilities also addresses one of the fundamental limitations of current XAI systems: their inability to anticipate user needs and provide contextually appropriate explanations. Traditional systems wait for users to formulate specific questions, but many users don't know what questions to ask or may not recognise when additional explanation would be beneficial. Proactive agents can bridge this gap by actively identifying opportunities for enhanced understanding and initiating appropriate dialogues.

This shift from reactive to reflective agents embodies a new philosophy of human-AI collaboration where AI systems take active responsibility for ensuring effective communication and understanding. Rather than placing the entire burden of explanation-seeking on human users, proactive agents share responsibility for creating productive reasoning dialogues.

The implications of this proactive capability extend beyond individual interactions to broader patterns of human-AI collaboration. When AI systems can anticipate communication needs and initiate helpful dialogues, they become more integrated into human decision-making processes. This integration can lead to more effective use of AI capabilities and better outcomes in domains where timely access to relevant information and reasoning support can make significant differences.

However, the development of proactive argumentative agents also raises important questions about the appropriate boundaries of AI initiative in human reasoning processes. Systems must be designed to enhance rather than replace human judgement, offering proactive support without becoming intrusive or undermining human agency in decision-making contexts.

Real-World Applications: Where Argumentative AI Makes a Difference

The practical applications of argumentative conversational agents span numerous domains where complex decision-making requires transparency, accountability, and the ability to engage with human expertise. In healthcare, these systems are beginning to transform how medical professionals interact with AI-assisted diagnosis and treatment recommendations. Rather than simply accepting or rejecting AI suggestions, clinicians can engage in detailed discussions about diagnostic reasoning, explore alternative interpretations of patient data, and collaboratively refine treatment plans based on their clinical experience and patient-specific factors.

Consider a scenario where an AI system recommends a particular treatment protocol for a cancer patient. A traditional XAI system might highlight the patient characteristics and clinical indicators that led to this recommendation. An argumentative agent, however, could engage the oncologist in a discussion about why other treatment options were considered and rejected, how the recommendation might change if certain patient factors were different, and what additional tests or information might strengthen or weaken the case for the suggested approach. This level of interactive engagement not only improves the clinician's understanding of the AI's reasoning but also creates opportunities for the AI system to learn from clinical expertise and real-world outcomes.

Financial services are another domain where argumentative AI systems could add significant value. Investment advisors, loan officers, and risk managers regularly make complex decisions that balance multiple competing factors and stakeholder interests. Traditional AI systems in these contexts often operate as black boxes, providing recommendations without adequate explanation of the underlying reasoning. Argumentative agents can transform these interactions by enabling financial professionals to explore different scenarios, challenge underlying assumptions, and understand how changing market conditions or client circumstances might affect AI recommendations.

The legal domain presents particularly compelling use cases for argumentative AI systems. Legal reasoning is inherently argumentative, involving the construction and evaluation of competing claims based on evidence, precedent, and legal principles. AI systems that can engage in formal legal argumentation could assist attorneys in case preparation, help judges understand complex legal analyses, and support legal education by providing interactive platforms for exploring different interpretations of legal principles and their applications.

In regulatory and compliance contexts, argumentative AI systems offer the potential to make complex rule-based decision-making more transparent and accountable. Regulatory agencies often must make decisions based on intricate webs of rules, precedents, and policy considerations. An argumentative AI system could help regulatory officials understand how different interpretations of regulations might apply to specific cases, explore the implications of different enforcement approaches, and engage with stakeholders who challenge or question regulatory decisions.

The educational applications of argumentative AI extend beyond training future professionals to supporting lifelong learning and skill development. These systems can serve as sophisticated tutoring platforms that don't just provide information but engage learners in the kind of Socratic dialogue that promotes deep understanding. Students can challenge AI explanations, explore alternative viewpoints, and develop critical thinking skills through organised interactions with systems that can defend their positions while remaining open to correction and refinement.

In practical applications like robotics, the purpose of an argumentative agent is not just to explain but to enable action. This involves a dialogue where the agent can “ask questions when confused” to clarify instructions, turning explanation into a collaborative task-oriented process. This represents a shift from passive explanation to active collaboration, where the AI system becomes a genuine partner in problem-solving rather than simply a tool that provides answers.
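
Here is a minimal sketch of "asking questions when confused". It assumes the instruction has already been parsed into attribute constraints. The `Obj` type, the attribute names and the scene are hypothetical. If exactly one object matches, the agent acts. If none or several match, it asks a targeted question instead of guessing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    name: str
    colour: str
    location: str


def resolve(constraints: dict[str, str], scene: list[Obj]) -> tuple[str, str]:
    """Return ("act", description) or ("ask", clarifying question)."""
    matches = [o for o in scene if all(getattr(o, k) == v for k, v in constraints.items())]
    if len(matches) == 1:
        target = matches[0]
        return ("act", f"picking up the {target.colour} {target.name} on the {target.location}")
    if not matches:
        return ("ask", "I can't find anything like that. Could you describe it differently?")
    # Several candidates: ask about the first attribute that tells them apart.
    for attr in ("colour", "location"):
        options = sorted({getattr(o, attr) for o in matches})
        if len(options) > 1:
            return ("ask", f"Which {attr} do you mean: {' or '.join(options)}?")
    return ("ask", "Several identical objects match. Could you point to the one you mean?")


scene = [Obj("cup", "red", "table"), Obj("cup", "blue", "shelf"), Obj("plate", "white", "table")]
print(resolve({"name": "cup"}, scene))                   # asks which colour
print(resolve({"name": "cup", "colour": "red"}, scene))  # acts once the answer is folded in
```

The user's answer is added to the constraints and `resolve` is called again. Clarification therefore becomes a short loop rather than a failure.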

The development of models like “TAGExplainer,” a system for translating graph reasoning into human-understandable stories, demonstrates that a key role for these agents is to act as storytellers. They translate complex, non-linear data structures and model decisions into a coherent, understandable narrative for the user. This narrative capability proves particularly valuable in domains where understanding requires grasping complex relationships and dependencies that don't lend themselves to simple explanations.
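
The following does not reproduce TAGExplainer's own method. It is a generic, hypothetical illustration of the storytelling idea. The code walks a chain of labelled edges from an explanation graph, renders each hop as a sentence, and ends with the model's prediction. The `narrate` helper, the node names and the relation labels are invented for the example.

```python
def narrate(edges: list[tuple[str, str, str]], start: str, prediction: str) -> str:
    """Walk an explanation chain from `start` and render each hop as a sentence."""
    # Assumes a simple chain: at most one outgoing edge per node.
    next_hop = {src: (rel, dst) for src, rel, dst in edges}
    sentences, node, seen = [], start, {start}
    while node in next_hop:
        rel, dst = next_hop[node]
        sentences.append(f"{node} {rel} {dst}.")
        if dst in seen:  # guard against cycles in the explanation graph
            break
        seen.add(dst)
        node = dst
    sentences.append(f"Because of this chain, the model predicts: {prediction}.")
    return " ".join(sentences)


edges = [("Paper A", "cites", "Paper B"), ("Paper B", "shares authors with", "Paper C")]
print(narrate(edges, "Paper A", "Paper A belongs to the same topic as Paper C"))
```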

The Broader Implications: Transforming Human-AI Collaboration

The emergence of argumentative conversational agents signals a philosophical shift in the nature of human-AI collaboration. As these systems become more sophisticated and widely deployed, they have the potential to transform how humans and AI systems work together across numerous domains and applications.

One of the most significant implications involves the democratisation of access to sophisticated reasoning capabilities. Argumentative AI agents can serve as reasoning partners that help humans explore complex problems, evaluate different options, and develop a more nuanced understanding of challenging issues.

The potential for argumentative AI to enhance human decision-making extends beyond individual interactions to organisational and societal levels. In business contexts, argumentative agents could facilitate more thorough exploration of strategic options, help teams identify blind spots in their reasoning, and support more robust risk assessment processes. The ability to engage in formal argumentation with AI systems could lead to more thoughtful and well-reasoned organisational decisions.

From a societal perspective, argumentative AI systems could contribute to more informed public discourse by helping individuals understand complex policy issues, explore different viewpoints, and develop more nuanced positions on challenging topics. Rather than simply reinforcing existing beliefs, argumentative agents could challenge users to consider alternative perspectives and engage with evidence that might contradict their initial assumptions.

The implications for AI development itself are equally significant. As argumentative agents become more sophisticated, they create new opportunities for AI systems to learn from human expertise and reasoning. The interactive nature of argumentative dialogue provides rich feedback that could be used to improve AI reasoning capabilities, identify gaps in knowledge or logic, and develop more robust and reliable AI systems over time.

However, these transformative possibilities also raise important questions about the appropriate role of AI in human reasoning and decision-making. As argumentative agents become more persuasive and sophisticated, there's a risk that humans might become overly dependent on AI reasoning or abdicate their own critical thinking responsibilities. Ensuring that argumentative AI enhances rather than replaces human reasoning capabilities requires careful attention to system design and deployment strategies.

The development of argumentative conversational agents also has implications for AI safety and alignment. Systems that can engage in sophisticated argumentation about their own behaviour and decision-making processes could provide new mechanisms for ensuring AI systems remain aligned with human values and objectives. The ability to question and challenge AI reasoning through formal dialogue could serve as an important safeguard against AI systems that develop problematic or misaligned behaviours.

The collaborative nature of argumentative AI also opens possibilities for more democratic approaches to AI governance and oversight. Rather than relying solely on technical experts to evaluate AI systems, argumentative agents could enable broader participation in AI accountability processes by making complex technical reasoning accessible to non-experts through structured dialogue.

The transformation extends to how we conceptualise the relationship between human and artificial intelligence. Rather than viewing AI as a tool to be used or a black box to be trusted, argumentative agents position AI as a reasoning partner that can engage in the kind of intellectual discourse that characterises human collaboration at its best. This shift could lead to more effective human-AI teams and better outcomes in domains where complex reasoning and decision-making are critical.

Future Horizons: The Evolution of Argumentative AI

The trajectory of argumentative conversational agents points toward increasingly sophisticated systems that can engage in nuanced, context-aware reasoning dialogues across diverse domains and applications. Several emerging trends and research directions are shaping the future development of these systems, each with significant implications for the broader landscape of human-AI interaction.

Multimodal argumentation represents one of the most promising frontiers in this field. Future argumentative agents will likely integrate visual, auditory, and textual information to construct and present arguments that leverage multiple forms of evidence and reasoning. A medical argumentative agent might combine textual clinical notes, medical imaging, laboratory results, and patient history to construct comprehensive arguments about diagnosis and treatment options. This multimodal capability could make argumentative reasoning more accessible and compelling for users who process information differently or who work in domains where visual or auditory evidence plays crucial roles.

The integration of real-time learning capabilities into argumentative agents represents another significant development trajectory. Current systems typically operate with fixed knowledge bases and reasoning capabilities, but future argumentative agents could continuously update their knowledge and refine their reasoning based on ongoing interactions with users and new information sources. This capability would enable argumentative agents to become more effective over time, developing deeper understanding of specific domains and more sophisticated approaches to engaging with different types of users.

Collaborative argumentation between multiple AI agents presents intriguing possibilities for enhancing the quality and robustness of AI reasoning. Rather than relying on single agents to construct and defend arguments, future systems might involve multiple specialised agents that can engage in formal debates with each other, with humans serving as moderators or participants in these AI-AI argumentative dialogues. This approach could help identify weaknesses in reasoning, explore a broader range of perspectives, and develop more robust conclusions through adversarial testing of different viewpoints.
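
A toy version of such an adversarial exchange can be written as a simple dialogue game. The sketch assumes that each agent holds a private bank of arguments and that a shared table records which argument attacks which. All names are hypothetical. The agents take turns, each move must attack the previous one, and an agent that cannot reply loses. The resulting transcript is what a human moderator would review.

```python
def debate(
    claim: str,
    attacked_by: dict[str, list[str]],
    banks: dict[str, set[str]],
    max_rounds: int = 10,
) -> tuple[str, list[tuple[str, str]]]:
    """Run an alternating dialogue game; return (winner, transcript)."""
    transcript = [("pro", claim)]  # the proponent opens with the claim
    used = {claim}
    speaker = "con"
    for _ in range(max_rounds):
        last = transcript[-1][1]
        # Legal replies: unused arguments in the speaker's bank that attack the last move.
        replies = [a for a in attacked_by.get(last, []) if a in banks[speaker] and a not in used]
        if not replies:
            # The speaker cannot answer, so the other side's last move stands.
            winner = "pro" if speaker == "con" else "con"
            return winner, transcript
        move = replies[0]  # greedy choice: take the first legal reply
        transcript.append((speaker, move))
        used.add(move)
        speaker = "pro" if speaker == "con" else "con"
    return "undecided", transcript


attacked_by = {
    "approve_loan": ["high_debt"],
    "high_debt": ["debt_refinanced"],
}
banks = {"pro": {"debt_refinanced"}, "con": {"high_debt"}}
winner, transcript = debate("approve_loan", attacked_by, banks)
for speaker, move in transcript:
    print(f"{speaker}: {move}")
print("winner:", winner)
```

Each agent here greedily plays its first legal reply, so the outcome can depend on ordering. A more serious system would search the game tree or evaluate the full argument graph before declaring a winner.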

The personalisation of argumentative interaction represents another important development direction. Future argumentative agents will likely be able to adapt their reasoning styles, communication approaches, and argumentative strategies to individual users based on their backgrounds, preferences, and learning patterns. This personalisation could make argumentative AI more effective across diverse user populations and help ensure that the benefits of argumentative reasoning are accessible to users with different cognitive styles and cultural backgrounds.

The integration of emotional intelligence into argumentative agents could significantly enhance their effectiveness in human interaction. Future systems might be able to recognise and respond to emotional cues in user communication, adapting their argumentative approaches to maintain productive dialogue even when discussing controversial or emotionally charged topics. This capability would be particularly valuable in domains like healthcare, counselling, and conflict resolution where emotional sensitivity is crucial for effective communication.

Standards and frameworks for argumentative AI evaluation and deployment are likely to emerge as these systems become more widespread. Professional organisations, regulatory bodies, and international standards groups will need to develop guidelines for assessing the quality of argumentative reasoning, ensuring the reliability and safety of argumentative agents, and establishing best practices for their deployment in different domains and contexts.

The potential for argumentative AI to contribute to scientific discovery and knowledge advancement represents one of the most exciting long-term possibilities. Argumentative agents could serve as research partners that help scientists explore hypotheses, identify gaps in reasoning, and develop more robust theoretical frameworks. In fields where scientific progress depends on the careful evaluation of competing theories and evidence, argumentative AI could accelerate discovery by providing sophisticated reasoning support and helping researchers engage more effectively with complex theoretical debates.

The development of argumentative agents that can engage across different levels of abstraction—from technical details to high-level principles—will be crucial for their widespread adoption. These systems will need to seamlessly transition between discussing specific implementation details with technical experts and exploring broader implications with policy makers or end users, all while maintaining logical consistency and argumentative coherence.

The emergence of argumentative AI ecosystems, where multiple agents with different specialisations and perspectives can collaborate on complex reasoning tasks, represents another significant development trajectory. These ecosystems could provide more comprehensive and robust reasoning support by bringing together diverse forms of expertise and enabling more thorough exploration of complex problems from multiple angles.

Conclusion: The Argumentative Imperative

The development of argumentative conversational agents for explainable AI embodies a fundamental recognition that effective human-AI collaboration requires systems capable of engaging in the kind of reasoned dialogue that characterises human intelligence at its best. As AI systems become increasingly powerful and ubiquitous, the ability to question, challenge, and engage with their reasoning becomes not just desirable but essential for maintaining human agency and ensuring responsible AI deployment.

The journey from static explanations to dynamic argumentative dialogue reflects a broader evolution in our understanding of what it means for AI to be truly explainable. Explanation is not simply about providing information; it's about facilitating understanding through interactive engagement that respects the complexity of human reasoning and the iterative nature of comprehension. Argumentative conversational agents provide a framework for achieving this more sophisticated form of explainability by embracing the inherently dialectical nature of human intelligence.

The technical challenges involved in developing effective argumentative AI are significant, but they are matched by the potential benefits for human-AI collaboration across numerous domains. From healthcare and finance to education and scientific research, argumentative agents offer the possibility of AI systems that can serve as genuine reasoning partners rather than black-box decision makers. This transformation could enhance human decision-making capabilities while ensuring that AI systems remain accountable, transparent, and aligned with human values.

As we continue to develop and deploy these systems, the focus must remain on augmenting rather than replacing human reasoning capabilities. The goal is not to create AI systems that can out-argue humans, but rather to develop reasoning partners that can help humans think more clearly, consider alternative perspectives, and reach better-founded conclusions. This requires ongoing attention to the human factors that make argumentative dialogue effective and satisfying, as well as continued technical innovation in argumentation frameworks, natural language processing, and human-computer interaction.

The future of explainable AI lies not in systems that simply tell us what they're thinking, but in systems that can engage with us in the messy, iterative, and ultimately human process of reasoning through complex problems together. Argumentative conversational agents represent a crucial step toward this future, offering a vision of human-AI collaboration that honours both the sophistication of artificial intelligence and the irreplaceable value of human reasoning and judgement.

The argumentative imperative is clear: as AI systems become more capable and influential, we must ensure they can engage with us as reasoning partners worthy of our trust and capable of earning our understanding through dialogue. The development of argumentative conversational agents for XAI is not just about making AI more explainable; it's about preserving and enhancing the fundamentally human capacity for reasoned discourse in an age of artificial intelligence.

The path forward requires continued investment in research that bridges technical capabilities with human needs, careful attention to the social and cultural dimensions of argumentative interaction, and a commitment to developing AI systems that enhance rather than diminish human reasoning capabilities. The stakes are high, but so is the potential reward: AI systems that can truly collaborate with humans in the pursuit of understanding, wisdom, and better decisions for all.

We don't need smarter machines—we need better conversations.

References and Further Information

Primary Research Sources:

“XAI meets LLMs: A Survey of the Relation between Explainable AI and Large Language Models” – Available at arxiv.org, provides a comprehensive overview of the intersection between explainable AI and large language models, examining how conversational capabilities can enhance AI explanation systems.

“How Human-Centered Explainable AI Interfaces Are Designed and Evaluated” – Available at arxiv.org, examines user-centered approaches to XAI interface design and evaluation methodologies, highlighting the importance of interactive dialogue in explanation systems.

“Can formal argumentative reasoning enhance LLMs performances?” – Available at arxiv.org, explores the integration of formal argumentation frameworks with large language models, demonstrating how structured reasoning can improve AI explanation capabilities.

“Mind the Gap! Bridging Explainable Artificial Intelligence and Human-Computer Interaction” – Available at arxiv.org, addresses the critical gap between technical XAI capabilities and human communication needs, emphasising the importance of dialogue-based approaches.

“Explanation in artificial intelligence: Insights from the social sciences” – Available at ScienceDirect, provides foundational research on how humans naturally engage in explanatory dialogue and the implications for AI system design.

“Explainable Artificial Intelligence in education” – Available at ScienceDirect, examines the distinctive needs of educational applications for XAI and the potential for argumentative agents in learning contexts.

CLunch Archive, Penn NLP – Available at nlp.cis.upenn.edu, contains research presentations and discussions on conversational AI and natural language processing advances, including work on proactive conversational agents.

ACL 2025 Accepted Main Conference Papers – Available at 2025.aclweb.org, features cutting-edge research on collaborative criticism and refinement frameworks for multi-agent argumentative systems, including developments in TAGExplainer for narrating graph explanations.

Professional Resources:

The journal “Argument & Computation” publishes cutting-edge research on formal argumentation frameworks and their applications in AI systems, providing technical depth on computational argumentation methods.

Association for Computational Linguistics (ACL) proceedings contain numerous papers on conversational AI, dialogue systems, and natural language explanation generation, offering insights into the latest developments in argumentative AI.

International Conference on Autonomous Agents and Multiagent Systems (AAMAS) regularly features research on argumentative agents and their applications across various domains, including healthcare, finance, and education.

Association for the Advancement of Artificial Intelligence (AAAI) and European Association for Artificial Intelligence (EurAI) provide ongoing resources and research updates in explainable AI and conversational systems, including standards development for argumentative AI evaluation.

Technical Standards and Guidelines:

IEEE Standards Association develops technical standards for AI systems, including emerging guidelines for explainable AI and human-AI interaction that incorporate argumentative dialogue principles.

ISO/IEC JTC 1/SC 42 Artificial Intelligence committee works on international standards for AI systems, including frameworks for AI explanation and transparency that support argumentative approaches.

Partnership on AI publishes best practices and guidelines for responsible AI development, including recommendations for explainable AI systems that engage in meaningful dialogue with users.

Tim Green, UK-based Systems Theorist & Independent Technology Writer

Tim explores the intersections of artificial intelligence, decentralised cognition, and posthuman ethics. His work, published at smarterarticles.co.uk, challenges dominant narratives of technological progress while proposing interdisciplinary frameworks for collective intelligence and digital stewardship.

His writing has been featured on Ground News and shared by independent researchers across both academic and technological communities.

ORCID: 0000-0002-0156-9795 Email: tim@smarterarticles.co.uk