The Double-Edged Revolution: How AI's Promise Meets Reality's Complex Challenges

In the rapidly evolving landscape of artificial intelligence, a fundamental tension has emerged that challenges our assumptions about technological progress and human capability. As AI systems become increasingly sophisticated and ubiquitous, society finds itself navigating uncharted territory where the promise of enhanced productivity collides with concerns about human agency, security, and the very nature of intelligence itself. From international security discussions at the United Nations to research laboratories exploring AI's role in scientific discovery, the technology is revealing itself to be far more complex—and consequential—than early adopters anticipated.

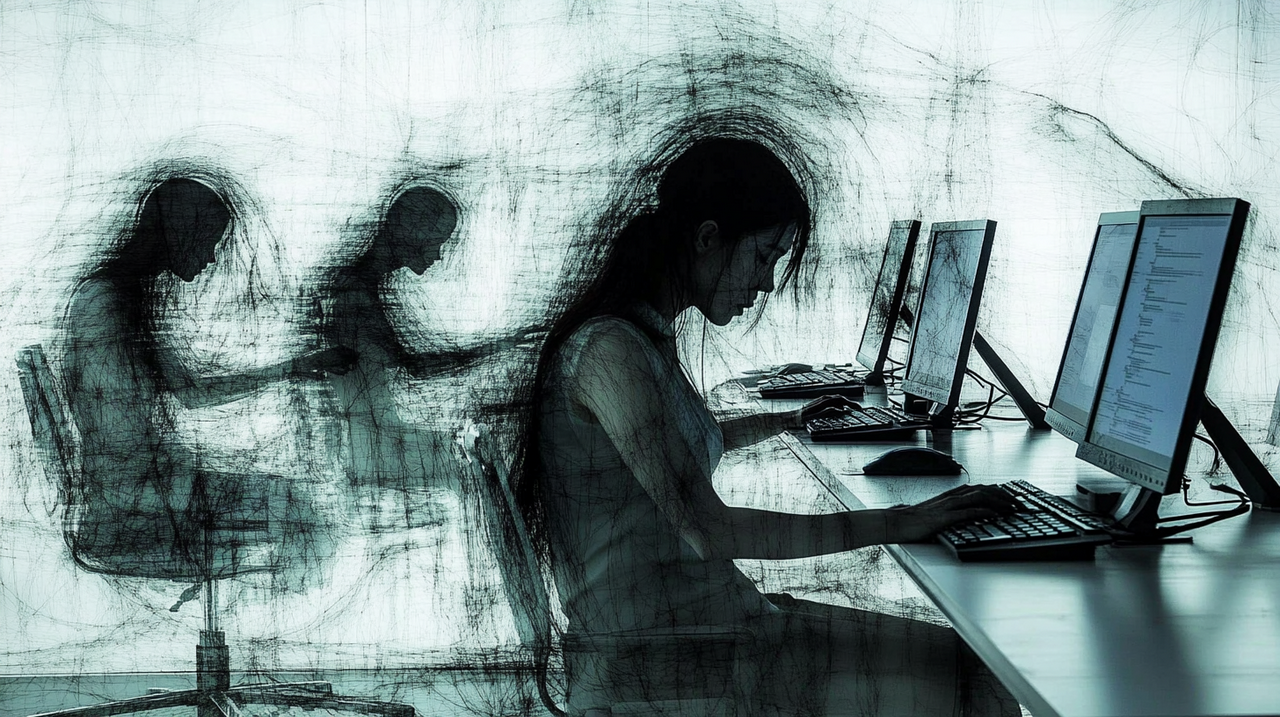

This complexity manifests in ways that extend far beyond technical specifications or performance benchmarks. AI is fundamentally altering how we work, think, and solve problems, creating what experts describe as a “double-edged sword” that can simultaneously enhance and diminish human capabilities. As industries rush to integrate AI into critical systems, from financial trading to scientific research, we're witnessing a collision between unprecedented opportunity and equally unprecedented uncertainty about the long-term implications of our choices.

The Cognitive Trade-Off

The most immediate impact of AI adoption reveals itself in the daily experience of users who find themselves caught between efficiency and engagement. Research into human-AI interaction has identified a fundamental paradox: while AI systems excel at automating difficult or unpleasant cognitive tasks, this convenience comes at the potential cost of skill atrophy and the loss of satisfaction derived from overcoming challenges.

This trade-off manifests across numerous domains. Students using AI writing assistants may produce better essays in less time, but they risk losing the critical thinking skills that develop through the struggle of composition. Financial analysts relying on AI for market analysis may process information more quickly, but they might gradually lose the intuitive understanding that comes from wrestling with complex data patterns themselves. The convenience of AI assistance creates what researchers describe as a “use it or lose it” dynamic for human cognitive abilities.

The phenomenon extends beyond individual skill development to change how people approach problems in the first place. When AI systems can provide instant answers to complex questions, users may become less inclined to engage in the deep, sustained thinking that leads to genuine understanding. This shift from active problem-solving to passive consumption of AI-generated solutions represents a profound change in how humans interact with information and challenges.

The implications are particularly concerning given the role of struggle and difficulty in human development and satisfaction. Psychological research has long established that overcoming challenges provides a sense of accomplishment and builds resilience. If AI systems remove too many of these challenges, they may inadvertently undermine sources of human fulfilment and growth. The technology designed to enhance human capabilities could paradoxically diminish them in subtle but significant ways.

This cognitive trade-off also affects professional development and expertise. In fields where AI can perform routine tasks, professionals may find their roles shifting towards higher-level oversight and decision-making. While this evolution can be positive, it also means that professionals may lose touch with the foundational skills and knowledge that inform good judgement. A radiologist who relies heavily on AI for image analysis may gradually lose the visual pattern recognition skills that allow them to catch subtle abnormalities that AI might miss.

The challenge is compounded by the fact that these effects may not be immediately apparent. The degradation of human skills and engagement often occurs gradually, making it difficult to recognise until significant capabilities have been lost. By the time organisations or individuals notice the problem, they may find themselves overly dependent on AI systems and unable to function effectively without them.

However, the picture is not entirely pessimistic. Some applications of AI can actually enhance human learning and development by providing personalised feedback, identifying knowledge gaps, and offering targeted practice opportunities. The key lies in designing AI systems and workflows that complement rather than replace human cognitive processes, preserving the elements of challenge and engagement that drive human growth while leveraging AI's capabilities to handle routine or overwhelming tasks.

The Security Imperative

While individual users grapple with AI's cognitive implications, international security experts are confronting far more consequential challenges. The United Nations Office for Disarmament Affairs has identified AI governance as a critical component of international security, recognising that the same technologies powering consumer applications could potentially be weaponised or misused in ways that threaten global stability.

This security perspective represents a significant shift from viewing AI primarily as a commercial technology to understanding it as a dual-use capability with profound implications for international relations and conflict. The concern is not merely theoretical—AI systems already demonstrate capabilities in pattern recognition, autonomous decision-making, and information processing that could be applied to military or malicious purposes with relatively minor modifications.

The challenge for international security lies in the civilian origins of most AI development. Unlike traditional weapons systems, which are typically developed within military or defence contexts subject to specific controls and oversight, AI technologies emerge from commercial research and development efforts that operate with minimal security constraints. This creates a situation where potentially dangerous capabilities can proliferate rapidly through normal commercial channels before their security implications are fully understood.

International bodies are particularly concerned about the potential for AI systems to be used in cyberattacks, disinformation campaigns, or autonomous weapons systems. The speed and scale at which AI can operate make it well suited to these applications, potentially allowing small groups or even individuals to cause damage that previously would have required significant resources and coordination. The democratisation of AI capabilities, while beneficial in many contexts, also democratises potential threats.

The response from the international security community has focused on developing new frameworks for AI governance that can address these dual-use concerns without stifling beneficial innovation. This involves bridging the gap between civilian-focused “responsible AI” communities and traditional arms control and non-proliferation experts, creating new forms of cooperation between groups that have historically operated in separate spheres.

However, the global nature of AI development complicates traditional approaches to security governance. AI research and development occur across multiple countries and jurisdictions, making it difficult to implement comprehensive controls or oversight mechanisms. The competitive dynamics of AI development also create incentives for countries and companies to prioritise capability advancement over security considerations, potentially leading to a race to deploy powerful AI systems without adequate safeguards.

The security implications extend beyond direct military applications to include concerns about AI's impact on economic stability, social cohesion, and democratic governance. AI systems that can manipulate information at scale, influence human behaviour, or disrupt critical infrastructure represent new categories of security threats that existing frameworks may be inadequate to address.

The Innovation Governance Challenge

The recognition of AI's security implications has led to the emergence of “responsible innovation” as a new paradigm for technology governance. This shift represents a fundamental departure from reactive regulation towards proactive risk management, embedding ethical considerations and security assessments throughout the entire AI system lifecycle. Rather than waiting to address problems after they occur, this approach seeks to anticipate and mitigate potential harms before they manifest, acknowledging that AI systems may pose novel risks that are difficult to predict using conventional approaches.

This proactive stance has gained particular urgency as international bodies recognise the interconnected nature of AI risks. The United Nations Office for Disarmament Affairs has positioned responsible innovation as essential for maintaining global stability, on the grounds that AI governance failures in one jurisdiction can rapidly affect others. The framework demands new methods for anticipating problems that may have no historical precedent, and governance mechanisms that can evolve alongside rapidly advancing capabilities.

The implementation of responsible innovation faces significant practical challenges. AI development often occurs at a pace that outstrips the ability of governance mechanisms to keep up, creating a situation where new capabilities emerge faster than appropriate oversight frameworks can be developed. The technical complexity of AI systems also makes it difficult for non-experts to understand the implications of new developments, complicating efforts to create effective governance structures.

Industry responses to responsible innovation initiatives have been mixed. Some companies have embraced the approach, investing in ethics teams, safety research, and stakeholder engagement processes. Others have been more resistant, arguing that excessive focus on potential risks could slow innovation and reduce competitiveness. This tension between innovation speed and responsible development represents one of the central challenges in AI governance.

The responsible innovation approach also requires new forms of collaboration between technologists, ethicists, policymakers, and affected communities. Traditional technology development processes often operate within relatively closed communities of experts, but responsible innovation demands broader participation and input from diverse stakeholders. This expanded participation can improve the quality of decision-making but also makes the development process more complex and time-consuming.

International coordination on responsible innovation presents additional challenges. Different countries and regions may have varying approaches to AI governance, creating potential conflicts or gaps in oversight. The global nature of AI development means that responsible innovation efforts need to be coordinated across jurisdictions to be effective, but achieving such coordination requires overcoming significant political and economic obstacles.

The responsible innovation framework also grapples with fundamental questions about the nature of technological progress and human agency. If AI systems can develop capabilities that their creators don't fully understand or anticipate, how can responsible innovation frameworks account for these emergent properties? The challenge is creating governance mechanisms that are flexible enough to address novel risks while being concrete enough to provide meaningful guidance for developers and deployers.

AI as Scientific Collaborator

Perhaps nowhere is AI's transformative potential more evident than in its evolving role within scientific research itself. The technology has moved far beyond simple data analysis to become what researchers describe as an active collaborator in the scientific process, generating hypotheses, designing experiments, and even drafting research papers. This evolution represents a fundamental shift in how scientific knowledge is created and validated.

In fields such as clinical psychology and suicide prevention research, AI systems are being used not merely to process existing data but to identify novel research questions and propose innovative methodological approaches. Researchers at SafeSide Prevention have embraced AI as a research partner, using it to generate new ideas and design studies that might not have emerged from traditional human-only research processes. This collaborative relationship between human researchers and AI systems is producing insights that neither could achieve independently, suggesting new possibilities for accelerating scientific discovery.

The integration of AI into scientific research offers significant advantages in terms of speed and scale. AI systems can process vast amounts of literature, identify patterns across multiple studies, and generate hypotheses at a pace that would be impossible for human researchers alone. This capability is particularly valuable in rapidly evolving fields where the volume of new research makes it difficult for individual scientists to stay current with all relevant developments.

However, this collaboration also raises important questions about the nature of scientific knowledge and discovery. If AI systems are generating hypotheses and designing experiments, what role do human creativity and intuition play in the scientific process? The concern is not that AI will replace human scientists, but that the nature of scientific work may change in ways that affect the quality and character of scientific knowledge.

The use of AI in scientific research also presents challenges for traditional peer review and validation processes. When AI systems contribute to hypothesis generation or experimental design, how should this contribution be evaluated and credited? The scientific community is still developing standards for assessing research that involves significant AI collaboration, creating uncertainty about how to maintain scientific rigour while embracing new technological capabilities.

There are also concerns about potential biases or limitations in AI-generated scientific insights. AI systems trained on existing literature may perpetuate historical biases or miss important perspectives that aren't well-represented in their training data. This could lead to research directions that reinforce existing paradigms rather than challenging them, potentially slowing scientific progress in subtle but significant ways.

The collaborative relationship between AI and human researchers is still evolving, with different fields developing different approaches to integration. Some research areas have embraced AI as a full partner in the research process, while others maintain more traditional divisions between human creativity and AI assistance. The optimal balance likely varies depending on the specific characteristics of different scientific domains.

The implications extend beyond individual research projects to affect the broader scientific enterprise. If AI can accelerate the pace of discovery, it might also accelerate the pace at which scientific knowledge becomes obsolete. This could create new pressures on researchers to keep up with rapidly evolving fields and might change the fundamental rhythms of scientific progress.

The Corporate Hype Machine

While serious researchers and policymakers grapple with AI's profound implications, much of the public discourse around AI is shaped by corporate marketing efforts that often oversimplify or misrepresent the technology's capabilities and limitations. The promotion of “AI-first” strategies as the latest business imperative creates a disconnect between the complex realities of AI implementation and the simplified narratives that drive adoption decisions.

This hype cycle follows familiar patterns from previous technology revolutions, where early enthusiasm and inflated expectations eventually give way to more realistic assessments of capabilities and limitations. However, the scale and speed of AI adoption mean that the consequences of this hype cycle may be more significant than previous examples. Organisations making major investments in AI based on unrealistic expectations may find themselves disappointed with results or unprepared for the challenges of implementation.

The corporate promotion of AI often focuses on dramatic productivity gains and competitive advantages while downplaying the complexity of successful implementation. Real-world AI deployment typically requires significant changes to workflows, extensive training for users, and ongoing maintenance and oversight. The gap between marketing promises and implementation realities can lead to failed projects and disillusionment with the technology.

The hype around AI also tends to obscure important questions about the appropriate use of the technology. Not every problem requires an AI solution, and in some cases, simpler approaches may be more effective and reliable. The pressure to adopt AI for its own sake, rather than as a solution to specific problems, can lead to inefficient resource allocation and suboptimal outcomes.

The disconnect between corporate hype and serious governance discussions is particularly striking. While technology executives promote AI as a transformative business tool, international security experts simultaneously engage in complex discussions about managing existential risks from the same technology. This parallel discourse creates confusion about AI's true capabilities and appropriate applications.

The media's role in amplifying corporate AI narratives also contributes to public misunderstanding about the technology. Sensationalised coverage of AI breakthroughs often lacks the context needed to understand limitations and risks, creating unrealistic expectations about what AI can accomplish. This misunderstanding can lead to both excessive enthusiasm and unwarranted fear, neither of which supports informed decision-making about AI adoption and governance.

The current wave of “AI-first” mandates from technology executives bears a striking resemblance to previous corporate fads, from the dot-com boom's obsession with internet strategies to more recent pushes for “return to office” policies. These top-down directives often reflect executive anxiety about being left behind rather than careful analysis of actual business needs or technological capabilities.

The Human Oversight Imperative

Regardless of AI's capabilities or limitations, the research consistently points to the critical importance of maintaining meaningful human oversight in AI systems, particularly in high-stakes applications. This oversight goes beyond simple monitoring to encompass active engagement with AI outputs, verification of results, and the application of human judgement to determine appropriate actions.

The quality of human oversight directly affects the safety and effectiveness of AI systems. Users who understand how to interact effectively with AI, who know when to trust or question AI outputs, and who can provide appropriate context and validation are more likely to achieve positive outcomes. Conversely, users who passively accept AI recommendations without sufficient scrutiny may miss errors or inappropriate suggestions.

This requirement for human oversight creates both opportunities and challenges for AI deployment. On the positive side, it enables AI systems to serve as powerful tools for augmenting human capabilities rather than replacing human judgement entirely. The combination of AI's processing power and human wisdom may achieve better results than either could accomplish alone.

However, the need for human oversight also limits the potential efficiency gains from AI adoption. If every AI output requires human review and validation, the time spent reviewing eats into the time saved, and the technology is unlikely to deliver the dramatic productivity improvements that many adopters hope for. This creates a tension between safety and efficiency that organisations must navigate carefully.

The psychological aspects of human-AI interaction also affect the quality of oversight. Research suggests that people tend to over-rely on automated systems, particularly when those systems are presented as intelligent or sophisticated. This tendency, known as “automation bias”, can lead users to defer to AI outputs even when their own knowledge or other available evidence should prompt them to question those outputs.

The challenge becomes more complex as AI systems become more sophisticated and convincing in their outputs. As AI-generated content becomes increasingly difficult to distinguish from human-generated content, users may find it harder to maintain appropriate scepticism and oversight. This evolution requires new approaches to training and education that help people understand how to work effectively with increasingly capable AI systems.

Professional users of AI systems face particular challenges in maintaining appropriate oversight. In fast-paced environments where quick decisions are required, the pressure to act on AI recommendations without thorough verification can conflict with safety requirements. The competitive advantages that AI provides may be partially offset by the time and resources required to ensure that recommendations are appropriate and safe.

The development of effective human oversight mechanisms requires understanding both the capabilities and limitations of specific AI systems. Users need to know what types of tasks AI systems handle well, where they are likely to make errors, and what kinds of human input are most valuable for improving outcomes. This knowledge must be continuously updated as AI systems evolve and improve.

Training programmes for AI users must go beyond basic technical instruction to include critical thinking skills, bias recognition, and decision-making frameworks that help users maintain appropriate levels of scepticism and engagement. The goal is not to make users distrust AI systems, but to help them develop the judgement needed to use these tools effectively and safely.

The Black Box Dilemma

One of the most significant challenges in ensuring appropriate human oversight of AI systems is their fundamental opacity. Modern AI systems, particularly large language models, operate as “black boxes” whose internal decision-making processes are largely mysterious, even to their creators. This opacity makes it extremely difficult to understand why AI systems produce particular outputs or to predict when they might behave unexpectedly.

Unlike traditional software, where programmers can examine code line by line to understand how a program works, AI systems contain billions or even trillions of parameters whose interactions defy human comprehension. The resulting systems can exhibit sophisticated behaviours and capabilities, but the mechanisms underlying these behaviours remain largely opaque.

This opacity creates significant challenges for oversight and accountability. How can users appropriately evaluate AI outputs if they don't understand how those outputs were generated? How can organisations be held responsible for AI decisions if the decision-making process is fundamentally incomprehensible? These questions become particularly pressing when AI systems are deployed in high-stakes applications where errors could have severe consequences.

The black box problem also complicates efforts to improve AI systems or address problems when they occur. Traditional debugging approaches rely on being able to trace problems back to their source and implement targeted fixes. But if an AI system produces an inappropriate output, it may be impossible to determine why it made that choice or how to prevent similar problems in the future.

Some researchers are working on developing “explainable AI” techniques that could make AI systems more transparent and interpretable. These approaches aim to create AI systems that can provide clear explanations for their decisions, making it easier to understand and evaluate their outputs. However, there's often a trade-off between AI performance and explainability—the most powerful AI systems tend to be the most opaque.

The black box problem extends beyond technical challenges to create difficulties for regulation and oversight. How can regulators evaluate the safety of AI systems they can't fully understand? How can professional standards be developed for technologies whose operation is fundamentally mysterious? These challenges require new approaches to governance that can address opacity while still providing meaningful oversight.

The opacity of AI systems also affects public trust and acceptance. Users and stakeholders may be reluctant to rely on technologies they don't understand, particularly when those technologies could affect important decisions or outcomes. This trust deficit could slow AI adoption and limit the technology's potential benefits, but it may also serve as a necessary brake on reckless deployment of insufficiently understood systems.

The challenge is particularly acute in domains where explainability has traditionally been important for professional practice and legal compliance. Medical diagnosis, legal reasoning, and financial decision-making all rely on the ability to trace and justify decisions. The introduction of opaque AI systems into these domains requires new frameworks for maintaining accountability while leveraging AI capabilities.

Research into AI interpretability continues to advance, with new techniques emerging for understanding how AI systems process information and make decisions. However, these techniques often provide only partial insights into AI behaviour, and it remains unclear whether truly comprehensive understanding of complex AI systems is achievable or even necessary for safe deployment.

Industry Adaptation and Response

The recognition of AI's complexities and risks has prompted varied responses across the technology industry and beyond. Some organisations have invested heavily in AI safety research and responsible development practices, while others have responded by limiting or delaying deployment. The diversity of responses reflects the uncertainty surrounding both the magnitude of AI's benefits and the severity of its potential risks.

Major technology companies have adopted different strategies for addressing AI safety and governance concerns. Some have established dedicated ethics teams, invested in safety research, and implemented extensive testing protocols before deploying new AI capabilities. These companies argue that proactive safety measures are essential for maintaining public trust and ensuring the long-term viability of AI technology.

Other organisations have been more sceptical of extensive safety measures, arguing that excessive caution could slow innovation and reduce competitiveness. These companies often point to the potential benefits of AI technology and argue that the risks are manageable through existing oversight mechanisms. The tension between these approaches reflects broader disagreements about the appropriate balance between innovation and safety.

The financial sector has been particularly aggressive in adopting AI technologies, driven by the potential for significant competitive advantages in trading, risk assessment, and customer service. However, this rapid adoption has also raised concerns about systemic risks if AI systems behave unexpectedly or if multiple institutions experience similar problems simultaneously. Financial regulators are beginning to develop new frameworks for overseeing AI use in systemically important institutions.

Healthcare organisations face unique challenges in AI adoption due to the life-and-death nature of medical decisions. While AI has shown tremendous promise in medical diagnosis and treatment planning, healthcare providers must balance the potential benefits against the risks of AI errors or inappropriate recommendations. The development of appropriate oversight and validation procedures for medical AI remains an active area of research and policy development.

Educational institutions are grappling with how to integrate AI tools while maintaining academic integrity and educational value. The availability of AI systems that can write essays, solve problems, and answer questions has forced educators to reconsider traditional approaches to assessment and learning. Some institutions have embraced AI as a learning tool, while others have implemented restrictions or bans on AI use.

The regulatory response to AI development has been fragmented and often reactive rather than proactive. Different jurisdictions are developing different approaches to AI governance, creating a patchwork of regulations that may be difficult for global companies to navigate. The European Union has been particularly active, adopting a comprehensive, risk-based framework in its AI Act, while other regions have taken more hands-off approaches.

Professional services firms are finding that AI adoption requires significant changes to traditional business models and client relationships. Law firms using AI for document review and legal research must develop new quality assurance processes and client communication strategies. Consulting firms leveraging AI for analysis and recommendations face questions about how to maintain the human expertise and judgement that clients value.

The technology sector itself is experiencing internal tensions as AI capabilities advance. Companies that built their competitive advantages on human expertise and creativity are finding that AI can replicate many of these capabilities, forcing them to reconsider their value propositions and business strategies. This disruption is happening within the technology industry even as it spreads to other sectors.

Future Implications and Uncertainties

The trajectory of AI development and deployment remains highly uncertain, with different experts offering dramatically different predictions about the technology's future impact. Some envision a future where AI systems become increasingly capable and autonomous, potentially achieving or exceeding human-level intelligence across a broad range of tasks. Others argue that current AI approaches have fundamental limitations that will prevent such dramatic advances.

The uncertainty extends to questions about AI's impact on employment, economic inequality, and social structures. While some jobs may be automated away by AI systems, new types of work may also emerge that require human-AI collaboration. The net effect on employment and economic opportunity remains unclear and will likely vary significantly across different sectors and regions.

The geopolitical implications of AI development are also uncertain but potentially significant. Countries that achieve advantages in AI capabilities may gain substantial economic and military benefits, potentially reshaping global power dynamics. The competition for AI leadership could drive increased investment in research and development but might also lead to corners being cut on safety and governance.

The long-term relationship between humans and AI systems remains an open question. Will AI remain a tool that augments human capabilities, or will it evolve into something more autonomous and independent? The answer may depend on technological developments that are difficult to predict, as well as conscious choices about how AI systems are designed and deployed.

The governance challenges surrounding AI are likely to become more complex as the technology advances. Current approaches to AI regulation and oversight may prove inadequate for managing more capable systems, requiring new frameworks and institutions. The international coordination required for effective AI governance may be difficult to achieve given competing national interests and different regulatory philosophies.

The emergence of AI capabilities that exceed human performance in specific domains raises profound questions about the nature of intelligence, consciousness, and human uniqueness. These philosophical and even theological concerns may become increasingly practical as AI systems grow more sophisticated and autonomous, and society may need to grapple directly with how artificial and human intelligence relate to one another.

The economic implications of widespread AI adoption could be transformative, potentially leading to significant increases in productivity and wealth creation. However, the distribution of these benefits is likely to be uneven, which could exacerbate existing inequalities or create new forms of economic stratification. The challenge will be ensuring that AI's benefits are broadly shared rather than concentrated among a small number of individuals or organisations.

Environmental considerations may also play an increasingly important role in AI development and deployment. The computational requirements of advanced AI systems are substantial and growing, leading to significant energy consumption and carbon emissions. Balancing AI's potential benefits against its environmental costs will require careful consideration and potentially new approaches to AI development that prioritise efficiency and sustainability.

Navigating the Double-Edged Future

The emergence of AI as a transformative technology presents society with choices that will shape the future of human capability, economic opportunity, and global security. The research and analysis surveyed here consistently point to AI as a double-edged tool that can simultaneously enhance and diminish human potential, depending on how it is developed, deployed, and governed.

The path forward requires careful navigation between competing priorities and values. Maximising AI's benefits while minimising its risks demands new approaches to technology development that prioritise safety and human agency alongside capability and efficiency. This balance cannot be achieved through technology alone but requires conscious choices about how AI systems are designed, implemented, and overseen.

The responsibility for shaping AI's impact extends beyond technology companies to include policymakers, educators, employers, and individual users. Each stakeholder group has a role to play in ensuring that AI development serves human flourishing rather than undermining it. This distributed responsibility requires new forms of collaboration and coordination across traditional boundaries.

The international dimension of AI governance presents particular challenges that require unprecedented cooperation between nations with different values, interests, and regulatory approaches. The global nature of AI development means that problems in one country can quickly affect others, making international coordination essential for effective governance.

The ultimate impact of AI will depend not just on technological capabilities but on the wisdom and values that guide its development and use. The choices made today about AI safety, governance, and deployment will determine whether the technology becomes a tool for human empowerment or a source of new risks and inequalities. The window for shaping these outcomes remains open, but it may not remain so indefinitely.

The story of AI's impact on society is still being written, with each new development adding complexity to an already intricate narrative. The challenge is ensuring that this story has a positive ending—one where AI enhances rather than diminishes human potential, where its benefits are broadly shared rather than concentrated among a few, and where its risks are managed rather than ignored. Achieving this outcome will require the best of human wisdom, cooperation, and foresight applied to one of the most consequential technologies ever developed.

As we stand at this inflection point, the choices we make about AI will echo through generations. The question is not whether we can create intelligence that surpasses our own, but whether we can do so while preserving what makes us most human. The answer lies not in the code we write or the models we train, but in the wisdom we bring to wielding power beyond our full comprehension.

References and Further Information

Primary Sources:
– Roose, K. “Why Even Try if You Have A.I.?” The New Yorker, 2024. Available at: www.newyorker.com
– Dash, A. “Don't call it a Substack.” Anil Dash, 2024. Available at: www.anildash.com
– United Nations Office for Disarmament Affairs. “Blog – UNODA.” Available at: disarmament.unoda.org
– SafeSide Prevention. “AI Scientists and the Humans Who Love them.” Available at: safesideprevention.com
– Ehrman, B. “A Revelatory Moment about 'God'.” The Bart Ehrman Blog, 2024. Available at: ehrmanblog.org

Technical and Research Context:
– Russell, S. and Norvig, P. Artificial Intelligence: A Modern Approach, 4th Edition. Pearson, 2020.
– Amodei, D. et al. “Concrete Problems in AI Safety.” arXiv preprint arXiv:1606.06565, 2016.
– Lundberg, S. M. and Lee, S. I. “A unified approach to interpreting model predictions.” Advances in Neural Information Processing Systems, 2017.

Policy and Governance:
– European Commission. “Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence (Artificial Intelligence Act).” Official Journal of the European Union, 2024.
– Partnership on AI. “About Partnership on AI.” Available at: www.partnershiponai.org
– United Nations Office for Disarmament Affairs. “Responsible Innovation in the Context of Conventional Weapons.” UNODA Occasional Papers, 2024.

Human-AI Interaction Research:
– Parasuraman, R. and Riley, V. “Humans and Automation: Use, Misuse, Disuse, Abuse.” Human Factors, vol. 39, no. 2, 1997.
– Lee, J. D. and See, K. A. “Trust in Automation: Designing for Appropriate Reliance.” Human Factors, vol. 46, no. 1, 2004.
– Amershi, S. et al. “Guidelines for Human-AI Interaction.” Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 2019.
– Bansal, G. et al. “Does the Whole Exceed its Parts? The Effect of AI Explanations on Complementary Team Performance.” Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 2021.

AI Safety and Alignment:
– Christiano, P. et al. “Deep Reinforcement Learning from Human Preferences.” Advances in Neural Information Processing Systems, 2017.
– Irving, G. et al. “AI Safety via Debate.” arXiv preprint arXiv:1805.00899, 2018.

Economic and Social Impact:
– Brynjolfsson, E. and McAfee, A. The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies. W. W. Norton & Company, 2014.
– Acemoglu, D. and Restrepo, P. “The Race between Man and Machine: Implications of Technology for Growth, Factor Shares, and Employment.” American Economic Review, vol. 108, no. 6, 2018.
– World Economic Forum. “The Future of Jobs Report 2023.” Available at: www.weforum.org
– Autor, D. H. “Why Are There Still So Many Jobs? The History and Future of Workplace Automation.” Journal of Economic Perspectives, vol. 29, no. 3, 2015.

Further Reading:
– Bostrom, N. Superintelligence: Paths, Dangers, Strategies. Oxford University Press, 2014.
– Christian, B. The Alignment Problem: Machine Learning and Human Values. W. W. Norton & Company, 2020.
– Mitchell, M. Artificial Intelligence: A Guide for Thinking Humans. Farrar, Straus and Giroux, 2019.
– O'Neil, C. Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. Crown Publishing Group, 2016.
– Zuboff, S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. PublicAffairs, 2019.
– Tegmark, M. Life 3.0: Being Human in the Age of Artificial Intelligence. Knopf, 2017.
– Russell, S. Human Compatible: Artificial Intelligence and the Problem of Control. Viking, 2019.

Tim Green
UK-based Systems Theorist & Independent Technology Writer

Tim explores the intersections of artificial intelligence, decentralised cognition, and posthuman ethics. His work, published at smarterarticles.co.uk, challenges dominant narratives of technological progress while proposing interdisciplinary frameworks for collective intelligence and digital stewardship.

His writing has been featured on Ground News and shared by independent researchers across both academic and technological communities.

ORCID: 0000-0002-0156-9795
Email: tim@smarterarticles.co.uk