The Invisible Housemate: How AI Agents Are Rewriting the Rules of Home

Your home is learning. Every time you adjust the thermostat, ask Alexa to play music, or let Google Assistant order groceries, you're training an invisible housemate that never sleeps, never forgets, and increasingly makes decisions on your behalf. The smart home revolution promised convenience, but it's delivering something far more complex: a fundamental transformation of domestic space, family relationships, and personal autonomy.

The statistics paint a striking picture. The global market for AI in smart home technology reached $12.7 billion in 2023 and is predicted to soar to $57.3 billion by 2031, growing at 21.3 per cent annually. The number of AI-centric households worldwide was expected to exceed 375 million by 2024, with smart speaker users reaching 400 million. These aren't just gadgets; they're autonomous agents embedding themselves into the fabric of family life.
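For readers who want to sanity-check growth figures like these, the arithmetic is simple compounding. The sketch below is illustrative only: the function names are invented here, and it assumes the forecast compounds over the eight years from 2023 to 2031, which the cited report may not do.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, annual_rate: float, years: int) -> float:
    """Value reached after compounding start_value at annual_rate for `years`."""
    return start_value * (1 + annual_rate) ** years


# Figures quoted in the article, in USD billions.
# ASSUMPTION: an 8-year horizon (2023 -> 2031); the forecaster's base year may differ.
START, END, YEARS, QUOTED_RATE = 12.7, 57.3, 8, 0.213

if __name__ == "__main__":
    print(f"Implied annual growth: {implied_cagr(START, END, YEARS):.1%}")
    print(f"2031 value at the quoted rate: ${project(START, QUOTED_RATE, YEARS):.1f}bn")
```

Under that assumption the implied rate comes out slightly below the quoted one. A gap of this size is what you would expect if the forecast compounds from a later base year, so the headline numbers are broadly consistent with each other.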

But as these AI systems gain control over everything from lighting to security, they're raising urgent questions about who really runs our homes. Are we directing our domestic environments, or are algorithms quietly nudging our behaviour in ways we barely notice? And what happens to family dynamics when an AI assistant becomes the household's de facto decision-maker, mediator, and memory-keeper?

When Your House Has an Opinion

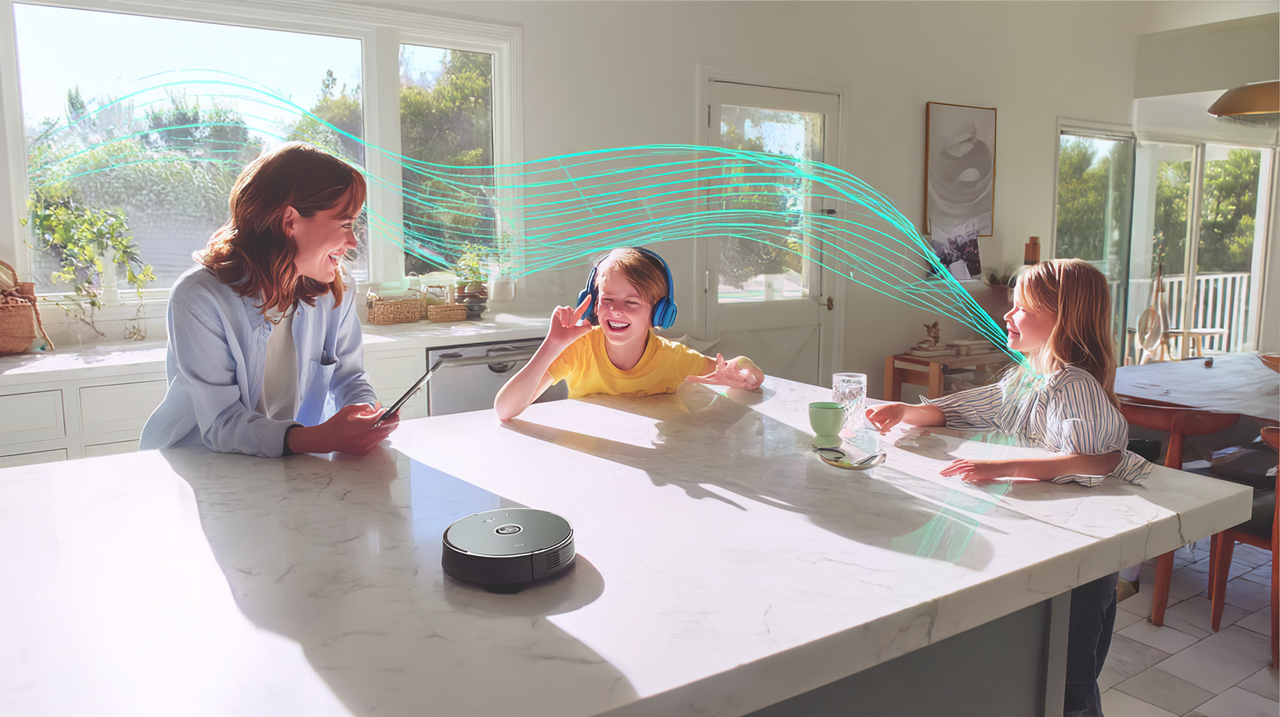

The smart home of 2025 isn't passive. Today's AI-powered residences anticipate needs, learn preferences, and make autonomous decisions. Amazon's Alexa+, powered by generative AI and free with Prime, represents this evolution. With more than 600 million Alexa devices in use worldwide, the assistant is designed to understand context, recognise individual family members, and create automations through conversation.

Google's Gemini assistant and Apple's revamped Siri follow similar paths. At the 2024 Consumer Electronics Show, LG Electronics unveiled a robotic AI agent that moves through homes, learns routines, and carries on sophisticated conversations. These aren't prototypes; they're commercial products shipping today.

The technical capabilities have expanded dramatically. Version 1.4 of the Matter protocol, released in November 2024, introduced support for batteries, solar systems, water heaters, and heat pumps. Matter, developed under the Connectivity Standards Alliance with backing from Amazon, Apple, Google, and other manufacturers, aims to solve the interoperability nightmare that has plagued smart homes for years. The protocol enables devices from different manufacturers to communicate seamlessly, creating truly integrated home environments rather than competing ecosystems locked behind proprietary walls.

This interoperability accelerates a crucial shift from individual smart devices to cohesive AI agents managing entire households. Voice assistants accounted for 33.04 per cent of the AI home automation market in 2024, a segment valued at $6.77 billion, and are projected to be the fastest-growing segment at 34.49 per cent annually through 2029. The transformation isn't only about market share; it's about how these systems reshape the intimate spaces where families eat, sleep, argue, and reconcile.

The New Household Dynamic: Who's Really in Charge?

When Brandon McDaniel and colleagues at the Parkview Mirro Center for Research and Innovation studied families' relationships with conversational AI agents, they discovered something unexpected: attachment-like behaviours. Their 2025 research in Family Relations found that approximately half of participants reported daily digital assistant use, with many displaying moderate attachment-like behaviour towards their AI companions.

“As conversational AI becomes part of people's environments, their attachment system may become activated,” McDaniel's team wrote. While future research must determine whether these represent true human-AI attachment, the implications for family dynamics are already visible. Higher frequency of use correlated with higher attachment-like behaviour and parents' perceptions of both positive and negative impacts.

When children develop attachment-like relationships with Alexa or Google Assistant, what happens to parent-child dynamics? A study of 305 Dutch parents with children aged three to eight found motivation for using voice assistants stemmed primarily from enjoyment, especially when used together with their children. However, parents perceived that dependence on AI increased risks to safety and privacy.

Family dynamics grow increasingly complex when AI agents assume specific household roles. A 2025 commentary in Family Relations explored three distinct personas: home maintainer, guardian, and companion. Each reshapes family relationships differently.

As a home maintainer, AI systems manage thermostats, lighting, and appliances, theoretically reducing household management burdens. But this seemingly neutral function can shift the gender division of chores and introduce new forms of control through digital housekeeping. Brookings Institution research highlights this paradox: nearly 40 per cent of the time spent on domestic chores could be automated within a decade, yet history suggests caution. Washing machines and dishwashers, introduced as labour-saving devices over a century ago, haven't eliminated the gender gap in household chores. These tools reduced time on specific tasks but shifted rather than alleviated the broader burden of care work.

The guardian role presents even thornier ethical terrain. AI monitoring household safety reshapes intimate surveillance practices within families. When cameras track children's movements, sensors report teenagers' comings and goings, and algorithms analyse conversations for signs of distress, traditional boundaries blur. Parents gain unprecedented monitoring capabilities, but at what cost to children's autonomy and trust?

As a companion, domestic AI both shapes and is shaped by existing household dynamics in ways researchers are only beginning to understand. When families turn to AI for entertainment, information, and even emotional support, these systems become active participants in family life rather than passive tools. The question isn't whether this is happening; it's what it means for human relationships when algorithms mediate family interactions.

The Privacy Paradox: Convenience Versus Control

The smart home operates on a fundamental exchange: convenience for data. Every interaction generates behavioural information flowing to corporate servers, where it's analysed, packaged, and often sold to third parties. This data collection apparatus represents what Harvard professor Shoshana Zuboff termed “surveillance capitalism” in her influential work.

Zuboff defines it as “the unilateral claiming of private human experience as free raw material for translation into behavioural data, which are then computed and packaged as prediction products and sold into behavioural futures markets.” Smart home devices epitomise this model. ProPublica reported that breathing machines for sleep apnea secretly send usage data to health insurers, where the information is used to justify reduced payments. If medical devices engage in such covert collection, what might smart home assistants be sharing?

The technical reality reinforces these concerns. A 2021 YouGov survey found 60 to 70 per cent of UK adults believe their smartphones and smart speakers listen to conversations unprompted. A PwC study found 40 per cent of voice assistant users still worry about what happens to their voice data. These aren't baseless fears; they reflect the opacity of data collection practices in smart home ecosystems.

Academic research confirms the privacy vulnerabilities. An international team led by IMDEA Networks and Northeastern University found that opaque Internet of Things devices inadvertently expose sensitive data within local networks: device names, unique identifiers, and household geolocation. Companies can harvest this information without user awareness. A separate 2018 study of smart speaker users found that 91 per cent had experienced unwanted Alexa recordings, and 29.2 per cent reported that some of those recordings contained sensitive information.

The security threats extend beyond passive data collection. Security researcher Matt Kunze discovered a flaw in Google Home speakers that allowed hackers to install backdoor accounts, enabling remote control and turning the speaker into a listening device. Google awarded Kunze $107,500 for responsibly disclosing the threat. In 2019, researchers demonstrated that hackers could control these devices from 360 feet away using a laser pointer. These vulnerabilities aren't hypothetical; researchers have demonstrated them against real, widely deployed devices.

Yet users continue adopting smart home technology at accelerating rates. Researchers describe this phenomenon as “privacy resignation,” a state where users understand risks but feel powerless to resist convenience and social pressure to participate in smart home ecosystems. Studies show users express few initial privacy concerns, but their rationalisations indicate incomplete understanding of privacy risks and complicated trust relationships with device manufacturers.

Users' mental models about smart home assistants are often limited to their household and the vendor, even when using third-party skills that access their data. This incomplete understanding leaves users vulnerable to privacy violations they don't anticipate and can't prevent using existing tools.

The Autonomy Question: Who Decides?

Personal autonomy sits at the heart of the smart home dilemma. The concept encompasses the freedom to make meaningful choices about one's life without undue external influence. AI home agents challenge this freedom in subtle but profound ways.

Consider the algorithmic nudge. Smart homes don't merely respond to preferences; they shape them. When your thermostat learns your schedule and adjusts automatically, you're ceding thermal control to an algorithm. When your smart refrigerator suggests recipes based on inventory analysis, it's influencing your meal decisions. When lighting creates ambience based on time and detected activities, it's architecting your home environment according to its programming, not necessarily your conscious preferences.

These micro-decisions accumulate into macro-influence. Researchers describe this phenomenon as “hypernudging,” a dynamic, highly personalised, opaque form of regulating choice architectures through big data techniques. Unlike traditional nudges, which are relatively transparent and static, hypernudges adapt in real-time through continuous data collection and analysis, making them harder to recognise and resist.

Manipulation concerns intensify when considering how AI agents learn and evolve. Machine learning systems are often optimised for engagement and continued use, not necessarily for users' wellbeing. When a voice assistant learns that certain response types keep you interacting longer, it might prioritise those patterns even if they don't best serve your interests. System goals and your goals can diverge without your awareness.

Family decision-making processes shift under AI influence. A study exploring families' visions of AI agents for household safety found that participants wanted to communicate and make final decisions themselves, while acknowledging that agents might offer convenient or less judgemental channels for discussing safety issues. Children specifically expressed a desire for the autonomy to discuss safety issues with AI first, then raise them with parents in their own terms.

This finding reveals the delicate balance families seek: using AI as a tool without ceding ultimate authority to algorithms. But maintaining this balance requires technical literacy, vigilance, and control mechanisms that current smart home systems rarely provide.

Autonomy challenges magnify for vulnerable populations. Older adults and individuals with disabilities often benefit tremendously from AI-assisted living, gaining independence they couldn't achieve otherwise. Smart home technologies enable older adults to live autonomously for extended periods, with systems swiftly detecting emergencies and deviations in behaviour patterns. Yet researchers emphasise these systems must enhance rather than diminish user autonomy, supporting independence while respecting decision-making abilities.

A 2025 study published in Frontiers in Digital Health argued AI surveillance in elder care must “begin with a moral commitment to human dignity rather than prioritising safety and efficiency over agency and autonomy.” The research found older adults' risk perceptions and tolerance regarding independent living often differ from family and professional caregivers' perspectives. One study found adult children preferred in-home monitoring technologies more than their elderly parents, highlighting how AI systems can become tools for imposing others' preferences rather than supporting the user's autonomy.

Research suggests that ongoing monitoring, even when aimed at protection, can produce feelings of anxiety, helplessness, or withdrawal from ordinary activities among older adults. The technologies designed to enable independence can paradoxically undermine it, transforming homes from private sanctuaries into surveilled environments where residents feel constantly watched and judged.

The Erosion of Private Domestic Space

The concept of home as a private sanctuary runs deep in Western culture and law. Courts have long recognised heightened expectations of privacy within domestic spaces, providing legal protections that don't apply to public venues. Smart home technology challenges these boundaries, turning private spaces into data-generating environments where every action becomes observable, recordable, and analysable.

Alexander Orlowski and Wulf Loh of the University of Tuebingen's International Center for Ethics in the Sciences and Humanities examined this transformation in their 2025 paper published in AI & Society. They argue smart home applications operate within “a space both morally and legally particularly protected and characterised by an implicit expectation of privacy from the user's perspective.”

Yet current regulatory efforts haven't kept pace with smart home environments. Collection and processing of user data in these spaces lack transparency and control. Users often remain unaware of the extent to which their data is being gathered, stored, and potentially shared with third parties. The home, traditionally a space shielded from external observation, becomes permeable when saturated with networked sensors and AI agents reporting to external servers.

This permeability affects family relationships and individual behaviour in ways both obvious and subtle. When family members know conversations might trigger smart speaker recordings, they self-censor. When teenagers realise their movements are tracked by smart home sensors, their sense of privacy and autonomy diminishes. When parents can monitor children's every activity through networked devices, traditional developmental processes of testing boundaries and building independence face new obstacles.

Surveillance extends beyond intentional monitoring. Smart home devices communicate constantly with manufacturers' servers, generating continuous data streams about household activities, schedules, and preferences. This ambient surveillance normalises the idea that homes aren't truly private spaces but rather nodes in vast corporate data collection networks.

Research on security and privacy perspectives of people living in shared home environments reveals additional complications. Housemates, family members, and domestic workers may have conflicting privacy preferences and unequal power to enforce them. When one person installs a smart speaker with always-listening microphones, everyone in the household becomes subject to potential recording regardless of their consent. The collective nature of household privacy creates ethical dilemmas current smart home systems aren't designed to address.

The architectural and spatial experience of home shifts as well. Homes have traditionally provided different zones of privacy, from public living spaces to intimate bedrooms. Smart home sensors blur these distinctions, creating continuous surveillance that erases gradients of privacy. The bedroom monitored by a smart speaker isn't fully private; the bathroom with a voice-activated assistant isn't truly solitary. The psychological experience of home as refuge diminishes when you can't be certain you're unobserved.

Children Growing Up With AI Companions

Perhaps nowhere are the implications more profound than in childhood development. Today's children are the first generation growing up with AI agents as household fixtures, encountering Alexa and Google Assistant as fundamental features of their environment from birth.

Research on virtual assistants in family homes reveals these devices are particularly prevalent in households with young children. A Dutch study of families with children aged three to eight found families differ mainly in parents' digital literacy skills, frequency of voice assistant use, trust in technology, and preferred degree of child media mediation.

But what are children learning from these interactions? Voice-activated virtual assistants provide quick answers to children's questions, potentially reducing the burden on parents to be constant sources of information. They can engage children in educational conversations and provide entertainment. Yet they also model specific interaction patterns and relationship dynamics that may shape children's social development in ways researchers are only beginning to understand.

When children form attachment-like relationships with AI assistants, as McDaniel's research suggests is happening, what does this mean for their developing sense of relationships, authority, and trust? Unlike human caregivers, AI assistants respond instantly, never lose patience, and don't require reciprocal care. They provide information without the uncertainty and nuance that characterise human knowledge. They offer entertainment without the negotiation that comes with asking family members to share time and attention.

These differences might seem beneficial on the surface. Children get immediate answers and entertainment without burdening busy parents. But developmental psychologists emphasise the importance of frustration tolerance, delayed gratification, and learning to navigate imperfect human relationships. When AI assistants provide frictionless interactions, children may miss crucial developmental experiences that shape emotional intelligence and social competence.

The data collection dimension adds another layer of concern. Children interacting with smart home devices generate valuable behavioural data that companies use to refine their products and potentially target marketing. Parents often lack full visibility into what data is collected, how it's analysed, and who has access to it. The global smart baby monitor market alone was valued at approximately $1.2 billion in 2023, with projections to reach over $2.5 billion by 2030, while the broader “AI parenting” market could reach $20 billion within the next decade. These figures represent significant commercial interest in monitoring and analysing children's behaviour.

Research on technology interference or “technoference” in parent-child relationships reveals additional concerns. A cross-sectional study found parents reported an average of 3.03 devices interfered daily with their interactions with children. Almost two-thirds of parents agreed they were worried about the impact of their mobile device use on their children and believed a computer-assisted coach would help them notice more quickly when device use interferes with caregiving.

The irony is striking: parents turn to AI assistants partly to reduce technology interference, yet these assistants represent additional technology mediating family relationships. The solution becomes part of the problem, creating recursive patterns where technology addresses issues created by technology, each iteration generating more data and deeper system integration.

Proposed Solutions and Alternative Futures

Recognition of smart home privacy and autonomy challenges has sparked various technical and regulatory responses. Some researchers and companies are developing privacy-preserving technologies that could enable smart home functionality without comprehensive surveillance.

Orlowski and Loh's proposed privacy smart home meta-assistant represents one technical approach. This system would provide real-time transparency, displaying which devices are collecting data, what type of data is being gathered, and where it's being sent. It would enable selective data blocking, allowing users to disable specific sensors or functions without turning off entire devices. The meta-assistant concept aims to shift control from manufacturers to users, creating genuine data autonomy within smart home environments.
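To make the idea concrete, here is a minimal sketch of the two behaviours described above: a transparency report of observed data flows, and per-sensor blocking that leaves the rest of a device working. It is an illustration under stated assumptions, not the authors' design. The class names, fields, and device labels are all hypothetical, and a real meta-assistant would need to sit on the home network to observe and intercept traffic.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataFlow:
    """One observed transmission: which sensor sent what, and where."""
    device: str       # e.g. "kitchen speaker" (hypothetical label)
    sensor: str       # e.g. "microphone"
    data_type: str    # e.g. "audio"
    destination: str  # e.g. "vendor cloud"


@dataclass
class MetaAssistant:
    """Hypothetical household-level monitor, for illustration only."""
    flows: list[DataFlow] = field(default_factory=list)
    blocked: set[tuple[str, str]] = field(default_factory=set)

    def block(self, device: str, sensor: str) -> None:
        """Block one sensor while leaving the rest of the device usable."""
        self.blocked.add((device, sensor))

    def observe(self, flow: DataFlow) -> bool:
        """Record a flow and return True if it may leave the home."""
        self.flows.append(flow)
        return (flow.device, flow.sensor) not in self.blocked

    def report(self) -> list[str]:
        """Transparency view: one line per distinct flow, with its status."""
        lines = []
        for flow in dict.fromkeys(self.flows):  # de-duplicate, keep first-seen order
            is_blocked = (flow.device, flow.sensor) in self.blocked
            status = "BLOCKED" if is_blocked else "allowed"
            lines.append(
                f"{flow.device}/{flow.sensor}: {flow.data_type} "
                f"-> {flow.destination} [{status}]"
            )
        return lines


if __name__ == "__main__":
    hub = MetaAssistant()
    hub.block("kitchen speaker", "microphone")
    hub.observe(DataFlow("kitchen speaker", "microphone", "audio", "vendor cloud"))
    hub.observe(DataFlow("kitchen speaker", "volume dial", "usage event", "vendor cloud"))
    print("\n".join(hub.report()))
```

Keying the block list on a (device, sensor) pair rather than on the device alone is what makes the blocking selective: the speaker's microphone can be silenced while its other functions continue.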

Researchers at the University of Michigan developed PrivacyMic, which uses ultrasonic sound at frequencies above human hearing range to enable smart home functionality without eavesdropping on audible conversations. This technical solution addresses one of the most sensitive aspects of smart home surveillance: always-listening microphones in intimate spaces.

For elder care applications, researchers are developing camera-based monitoring systems that address dual objectives of privacy and safety using AI-driven techniques for real-time subject anonymisation. Rather than traditional pixelisation or blurring, these systems replace subjects with two-dimensional avatars. Such avatar-based systems can reduce feelings of intrusion and discomfort associated with constant monitoring, thereby aligning with elderly people's expectations for dignity and independence.

A “Dignity-First” framework proposed by researchers includes informed and ongoing consent as a dynamic process, with regular check-in points and user-friendly settings enabling users or caregivers to modify permissions. This approach recognises that consent isn't a one-time event but an ongoing negotiation that must adapt as circumstances and preferences change.
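One way to picture consent as an ongoing process rather than a one-time checkbox is a permission that lapses unless it is periodically reconfirmed. The sketch below is a hypothetical illustration, not part of the published framework: the class name, the 90-day review interval, and the choice to pause a feature when a check-in is overdue are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ConsentRecord:
    """Consent for one monitoring feature, with a built-in review cycle."""
    feature: str                                # e.g. "fall_detection" (hypothetical)
    granted: bool
    last_confirmed: date
    review_every: timedelta = timedelta(days=90)  # ASSUMPTION: illustrative interval

    def needs_check_in(self, today: date) -> bool:
        """True once the review interval has elapsed since the last confirmation."""
        return today - self.last_confirmed >= self.review_every

    def is_active(self, today: date) -> bool:
        """Monitoring runs only with consent that is both granted and current."""
        return self.granted and not self.needs_check_in(today)

    def confirm(self, today: date, granted: bool) -> None:
        """Record the outcome of a check-in with the user or their caregiver."""
        self.granted = granted
        self.last_confirmed = today


if __name__ == "__main__":
    record = ConsentRecord("fall_detection", True, date(2025, 1, 1))
    print(record.is_active(date(2025, 2, 1)))   # within the review window
    print(record.is_active(date(2025, 6, 1)))   # check-in overdue, so paused
    record.confirm(date(2025, 6, 1), granted=True)
    print(record.is_active(date(2025, 6, 2)))   # reconfirmed
```

The design choice worth noting is the default: when a check-in is missed, the sketch pauses the feature rather than carrying on silently. A safety-critical deployment might reasonably choose the opposite and prompt more insistently instead, which is exactly the kind of trade-off between agency and protection the framework asks designers to make explicit.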

Regulatory approaches are evolving as well, though they lag behind technological development. Data protection frameworks like the European Union's General Data Protection Regulation establish principles of consent, transparency, and user control that theoretically apply to smart home devices. However, enforcement remains challenging, and many users struggle to exercise their nominal rights due to complex interfaces and opaque data practices.

The Matter protocol's success in establishing interoperability standards demonstrates that industry coordination on technical specifications is achievable. Similar coordination on privacy and security standards could establish baseline protections across smart home ecosystems. The Connectivity Standards Alliance could expand its mandate beyond device communication to encompass privacy protocols, creating industry-wide expectations for data minimisation, transparency, and user control.

Consumer education represents another crucial component. Research consistently shows users have incomplete mental models of smart home privacy risks and limited understanding of how data flows through these systems. Educational initiatives could help users make more informed decisions about which devices to adopt, how to configure them, and what privacy trade-offs they're accepting.

Some families are developing their own strategies for managing AI agents in household contexts. These include establishing device-free zones or times, having explicit family conversations about AI use and privacy expectations, teaching children to question and verify AI-provided information, and regularly reviewing and adjusting smart home configurations and permissions.

The Path Forward: Reclaiming Domestic Agency

The smart home revolution isn't reversible, nor should it necessarily be. AI agents offer genuine benefits for household management, accessibility, energy efficiency, and convenience. The challenge isn't to reject these technologies but to ensure they serve human values rather than subordinating them to commercial imperatives.

This requires reconceptualising the relationship between households and AI agents. Rather than viewing smart homes as consumer products that happen to collect data, we must recognise them as sociotechnical systems that reshape domestic life, family relationships, and personal autonomy. This recognition demands different design principles, regulatory frameworks, and social norms.

Design principles should prioritise transparency, user control, and reversibility. Smart home systems should clearly communicate what data they collect, how they use it, and who can access it. Users should have granular control over data collection and device functionality, with the ability to disable specific features without losing all benefits. Design should support reversibility, allowing users to disengage from smart home systems without losing access to their homes' basic functions.

Regulatory frameworks should establish enforceable standards for data minimisation, requiring companies to collect only data necessary for providing services users explicitly request. They should mandate interoperability and data portability, preventing vendor lock-in and enabling users to switch between providers. They should create meaningful accountability mechanisms with sufficient penalties to deter privacy violations and security negligence.

Social norms around smart homes are still forming. Families, communities, and societies have opportunities to establish expectations about appropriate AI agent roles in domestic spaces. These norms might include conventions about obtaining consent from household members before installing monitoring devices, expectations for regular family conversations about technology use and boundaries, and cultural recognition that some aspects of domestic life should remain unmediated by algorithms.

Educational initiatives should help users understand smart home systems' capabilities, limitations, and implications. This includes technical literacy about how devices work and data flows, but also broader critical thinking about what values and priorities should govern domestic technology choices.

The goal isn't perfect privacy or complete autonomy; both have always been aspirational rather than absolute. The goal is ensuring that smart home adoption represents genuine choice rather than coerced convenience, that the benefits accrue to users rather than extracting value from them, and that domestic spaces remain fundamentally under residents' control even as they incorporate AI agents.

Research by family relations scholars emphasises the importance of communication and intentionality. When families approach smart home adoption thoughtfully, discussing their values and priorities, establishing boundaries and expectations, and regularly reassessing their technology choices, AI agents can enhance rather than undermine domestic life. When they adopt devices reactively, without consideration of privacy implications or family dynamics, they risk ceding control of their intimate spaces to systems optimised for corporate benefit rather than household wellbeing.

Conclusion: Writing Our Own Domestic Future

As I adjust my smart thermostat while writing this, ask my voice assistant to play background music, and let my robotic vacuum clean around my desk, I'm acutely aware of the contradictions inherent in our current moment. We live in homes that are simultaneously more convenient and more surveilled, more automated and more controlled by external actors, more connected and more vulnerable than ever before.

The question isn't whether AI agents will continue proliferating through our homes; market projections make clear that they will. The United States smart home market alone is expected to reach over $87 billion by 2032, with the integration of AI with Internet of Things devices playing a crucial role in advancement and adoption. Globally, the smart home automation market is estimated to reach $254.3 billion by 2034, growing at a compound annual growth rate of 13.7 per cent.

The question is whether this proliferation happens on terms that respect human autonomy, dignity, and the sanctity of domestic space, or whether it continues along current trajectories that prioritise corporate data collection and behaviour modification over residents' agency and privacy.

The answer depends on choices made by technology companies, regulators, researchers, and perhaps most importantly, by individuals and families deciding how to incorporate AI agents into their homes. Each choice to demand better privacy protections, to question default settings, to establish family technology boundaries, or to support regulatory initiatives represents a small act of resistance against the passive acceptance of surveillance capitalism in our most intimate spaces.

The home has always been where we retreat from public performance, where we can be ourselves without external judgement, where family bonds form and individual identity develops. As AI agents increasingly mediate these spaces, we must ensure they remain tools serving household residents rather than corporate proxies extracting value from our domestic lives.

The smart home future isn't predetermined. It's being written right now through the collective choices of everyone navigating these technologies. We can write a future where AI agents enhance human flourishing, support family relationships, and respect individual autonomy. But doing so requires vigilance, intention, and willingness to prioritise human values over algorithmic convenience.

The invisible housemate is here to stay. The question is: who's really in charge?

Sources and References

InsightAce Analytic. (2024). “AI in Smart Home Technology Market Analysis and Forecast 2024-2031.” Market valued at USD 12.7 billion in 2023, predicted to reach USD 57.3 billion by 2031 at 21.3% CAGR.

Restack. (2024). “Smart Home AI Adoption Statistics.” Number of AI-centric houses worldwide expected to exceed 375.3 million by 2024, with smart speaker users reaching 400 million.

Market.us. (2024). “AI In Home Automation Market Size, Share | CAGR of 27%.” Global market reached $20.51 billion in 2024, expected to grow to $75.16 billion by 2029 at 29.65% CAGR.

Amazon. (2025). “Introducing Alexa+, the next generation of Alexa.” Over 600 million Alexa devices in use globally, powered by generative AI.

Connectivity Standards Alliance. (2024). “Matter 1.4 Enables More Capable Smart Homes.” Version 1.4 released November 7, 2024, introducing support for batteries, solar systems, water heaters, and heat pumps.

McDaniel, Brandon T., et al. (2025). “Emerging Ideas. A brief commentary on human–AI attachment and possible impacts on family dynamics.” Family Relations, Vol. 74, Issue 3, pages 1072-1079. Approximately half of participants reported at least daily digital assistant use with moderate attachment-like behaviour.

McDaniel, Brandon T., et al. (2025). “Parent and child attachment-like behaviors with conversational AI agents and perceptions of impact on family dynamics.” Research repository, Parkview Mirro Center for Research and Innovation.

ScienceDirect. (2022). “Virtual assistants in the family home: Understanding parents' motivations to use virtual assistants with their child(dren).” Study of 305 Dutch parents with children ages 3-8 using Google Assistant-powered smart speakers.

Wiley Online Library. (2025). “Home maintainer, guardian or companion? Three commentaries on the implications of domestic AI in the household.” Family Relations, examining three distinct personas domestic AI might assume.

Brookings Institution. (2023). “The gendered division of household labor and emerging technologies.” Nearly 40% of time spent on domestic chores could be automated within next decade.

Zuboff, Shoshana. (2019). “The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power.” Harvard Business School Faculty Research. Defines surveillance capitalism as the unilateral claiming of private human experience as raw material for behavioural data.

Harvard Gazette. (2019). “Harvard professor says surveillance capitalism is undermining democracy.” Interview with Professor Shoshana Zuboff on surveillance capitalism's impact.

YouGov. (2021). Survey finding approximately 60-70% of UK adults believe smartphones and smart speakers listen to conversations unprompted.

PwC. Study finding 40% of voice assistant users have concerns about voice data handling.

IMDEA Networks and Northeastern University. (2024). Research on security and privacy challenges posed by IoT devices in smart homes, finding inadvertent exposure of sensitive data including device names, UUIDs, and household geolocation.

ACM Digital Library. (2018). “Alexa, Are You Listening?: Privacy Perceptions, Concerns and Privacy-seeking Behaviors with Smart Speakers.” Proceedings of the ACM on Human-Computer Interaction, Vol. 2, No. CSCW. Found 91% experienced unwanted Alexa recording; 29.2% contained sensitive information.

PacketLabs. Security researcher Matt Kunze's discovery of Google Home speaker flaw enabling backdoor account installation; awarded $107,500 by Google.

Nature Portfolio. (2024). “Inevitable challenges of autonomy: ethical concerns in personalized algorithmic decision-making.” Humanities and Social Sciences Communications, examining algorithmic decision-making's impact on user autonomy.

arXiv. (2025). “Families' Vision of Generative AI Agents for Household Safety Against Digital and Physical Threats.” Study with 13 parent-child dyads investigating attitudes toward AI agent-assisted safety management.

Orlowski, Alexander and Loh, Wulf. (2025). “Data autonomy and privacy in the smart home: the case for a privacy smart home meta-assistant.” AI & Society, Volume 40. International Center for Ethics in the Sciences and Humanities (IZEW), University of Tuebingen, Germany. Received March 26, 2024; accepted January 10, 2025.

Frontiers in Digital Health. (2025). “Designing for dignity: ethics of AI surveillance in older adult care.” Research arguing technologies must begin with moral commitment to human dignity.

BMC Geriatrics. (2020). “Are we ready for artificial intelligence health monitoring in elder care?” Examining ethical concerns including erosion of privacy and dignity, finding older adults' risk perceptions differ from caregivers'.

MDPI Applied Sciences. (2024). “AI-Driven Privacy in Elderly Care: Developing a Comprehensive Solution for Camera-Based Monitoring of Older Adults.” Vol. 14, No. 10. Research on avatar-based anonymisation systems.

University of Michigan. (2024). “PrivacyMic: For a smart speaker that doesn't eavesdrop.” Development of ultrasonic sound-based system enabling smart home functionality without eavesdropping.

PMC. (2021). “Parents' Perspectives on Using Artificial Intelligence to Reduce Technology Interference During Early Childhood: Cross-sectional Online Survey.” Study finding that parents reported a mean of 3.03 devices interfering daily with child interactions.

Markets and Markets. (2023). Global smart baby monitor market valued at approximately $1.2 billion in 2023, projected to reach over $2.5 billion by 2030.

Global Market Insights. (2024). “Smart Home Automation Market Size, Share & Trend Report, 2034.” Market valued at $73.7 billion in 2024, estimated to reach $254.3 billion by 2034 at 13.7% CAGR.

Globe Newswire. (2024). “United States Smart Home Market to Reach Over $87 Billion by 2032.” Market analysis showing integration of AI with IoT playing crucial role in advancement and adoption.

Matter Alpha. (2024). “2024: The Year Smart Home Interoperability Began to Matter.” Analysis of Matter protocol's impact on smart home compatibility.

Connectivity Standards Alliance. (2024). “Matter 1.3” specification published May 8, 2024, adding support for water and energy management devices and for appliances.

Tim Green
UK-based Systems Theorist & Independent Technology Writer

Tim explores the intersections of artificial intelligence, decentralised cognition, and posthuman ethics. His work, published at smarterarticles.co.uk, challenges dominant narratives of technological progress while proposing interdisciplinary frameworks for collective intelligence and digital stewardship.

His writing has been featured on Ground News and shared by independent researchers across both academic and technological communities.

ORCID: 0009-0002-0156-9795
Email: tim@smarterarticles.co.uk

#HumanInTheLoop #SmartHomeAI #PrivacyEthics #DomesticAutomation